@mastering92

People make donations on ASR. Long ago, there was proof of collusion between said site admin and now-popular Chi-Fi audio brands (execs and designers) on various forums. Of course, before starting ASR, those tracks were paved over....so anyone who thinks ASR is an audio science charity is fooling themselves.

The only people fooling themselves are those believing such nonsense. Here is the background on this.

The rumor started on SBAF forum. Some guy found a distributor for Topping products who had the same *first name* as me, i.e. Amir. That is like thinking anyone called John is like any other person called John in US! He then made another preposterous claim that Topping and SMSL are the same company. This is completely untrue. These are fierce competitors and are not at all part of any company, holding or otherwise.

I pointed this out on ASR a few years ago when this came up and have not heard anyone repeat it until now.

So to be clear, I have zero business relationship with any audio company, Chinese or otherwise. I retired successfully from technology industry more than a decade ago and am in no need of commercial benefits in that manner. My methodology for recommending audio products is based for the most part on objective measurements which cannot be subject to bias in that manner even if I did have a connection with them.

So please don't go spreading such rumors, much less to say there is any kind of "proof." If you have proof, present it. Otherwise, you are just showing how little it takes for you to believe in complete fantasy.

|

@1extreme

They have so little regard at ASR for actually listening to components that they don’t even bother listening to them. Amir performs all these tests and I don’t believe he listens to a single one or even has a reference system to drop the component in to get an impression.

You need to put aside "belief" and substitute reality. I listen to a huge amount of audio gear as part of my testing. Every speaker, headphone, headphone amp and even some audio tweaks such as power conditioners, cables, etc. have listening tests. This adds up to about 200 devices a year that get reviewed with listening tests.

I have a reference system as well where equipment is tested. For example, I used RME ADI-2 Pro ($2000) driving a $4000 Dan Clark headphone to test a headphone amp. Cables are tested the same way if they are interconnects and such. Ditto for power conditioners, etc. Here is an example from this week alone, a headphone amp:

"This is a review, listening tests and detailed measurements of the Eleven XIAudio Broadway balanced battery operated desktop headphone amplifier. "

Far field devices like speakers get tested in and against my nearly $100K main audio system. Power is provided by $20K in amplification, for example. I list these prices not because they should matter, but because I suspect they matter a lot to you as to what makes a "reference system."

But no, I don't listen to everything. Measurements so powerfully describe the performance of such devices, showing impairments well below hearing for example, that it makes no sense for me to listen to them and then proceed to make up stuff like subjective reviewers do. Mind you, if measurements show a problem, I do listen even in that category. Here is an example of that, the PS Audio DirectStream DAC:

https://www.audiosciencereview.com/forum/index.php?threads/review-and-measurements-of-ps-audio-perfectwave-directstream-dac.9100/

"I started the testing with my audiophile, audio-show, test tracks. You know, the very well recorded track with lucious detail and "black backgrounds." I immediately noticed lack of detail in PerfectWave DS DAC. It was as if someone just put a barrier between you and the source. Mind you, it was subtle but it was there. I repeated this a few times and while it was not always there with all music, I could spot it on some tracks.

Next I played some of my bass heaving tracks i use for headphone testing. Here, it was easy to notice that bass impact was softented. But also, highs were exaggerated due to higher distortion. Despite loss of high frequency hearing, I found that accentuation unpleasant. WIth tracks that had lisping issues with female vocals for example, the DS DAC made that a lot worse."

You could have figured all of this out by simply going and looking. But instead you just repeated incorrect talking points.

|

@normb

Basic measurements are only a benchmark, an objective standard, but how something SOUNDS is purely subjective and has to take into account intangibles like combined elements in the system, the room acoustics, speaker placement, and the listener, right?

Yes and no. In most cases, audio gear is designed so that its performance stands on its own, or you would never be able to assemble any system. Or trust any reviewer whatsoever, right? For example, a source device such as a DAC has a low output impedance of, say, 100 to 200 ohms. The pre-amp then has an input impedance of at least 10X that. This way you get nearly full voltage transfer, which is what we want.

On the other hand, there are tube amps with high output impedance which then interacts with the impedance of the speaker and changes its frequency response. This causes tonality to shift in the system not because of anything good, but because of poor design. A solid state amp will have well below 1 ohm output impedance so as to eliminate this effect. You could detect these effects using either listening tests (if the person is properly trained and the difference large enough) or measurements.
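To put rough numbers on the two cases above, here is a minimal sketch that treats each connection as a plain resistive voltage divider. The function names and every impedance value (a 150 ohm source into a 1.5 kohm input, a 3 ohm tube amp output, a 0.05 ohm solid state output, and a speaker whose impedance swings between 4 and 20 ohms) are illustrative assumptions, not measurements of any specific product.

```python
import math

def divider_loss_db(z_out: float, z_load: float) -> float:
    """Level at the load relative to the unloaded source, in dB (purely resistive model)."""
    return 20 * math.log10(z_load / (z_out + z_load))

def response_swing_db(z_out: float, z_min: float, z_max: float) -> float:
    """Level difference between the speaker's highest and lowest impedance points."""
    return divider_loss_db(z_out, z_max) - divider_loss_db(z_out, z_min)

# Assumed DAC output impedance of 150 ohms into an input that is 10x higher.
dac_into_preamp = divider_loss_db(150.0, 1500.0)

# Assumed speaker impedance range of 4 to 20 ohms across the audio band.
tube_swing = response_swing_db(3.0, 4.0, 20.0)          # high output impedance
solid_state_swing = response_swing_db(0.05, 4.0, 20.0)  # very low output impedance

print(f"DAC into pre-amp: {dac_into_preamp:.2f} dB")
print(f"Tube amp response swing: {tube_swing:.2f} dB")
print(f"Solid state response swing: {solid_state_swing:.2f} dB")
```

Under these assumed values the 10X rule costs well under 1 dB of level, the same at every frequency. The assumed tube amp shifts the response by several dB as the speaker impedance moves, while the solid state case stays under a tenth of a dB. Real loads are reactive, so this is only a first-order picture.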

In vast majority of cases though, the modular aspect of audio allows us to independently test and evaluate a component by itself. Measurements are much more powerful in this regard because audiophiles as a group are terrible at detecting non-linear artifacts. But even for things like speakers where distortions are apparent, we are a) mostly alike when it comes to preferences and b) non-professionally trained listeners including reviewers and dealers are terrible at providing consistent and proper feedback. Please see this formal study:

Indeed, the subjective data from audio reviewers is so bad that you need 10 times as many of them to equal properly and formally trained speaker listeners! That is the problem with subjective remarks from audiophiles or audiophile press. It is so unreliable that it is not worth paying attention to. The same study by the way shows that listener preference is similar among a dozen different listener classes:

See how the ranking of each speaker did not matter (different colors) regardless of who was listening to it (X axis). Green speaker for example was bad no matter who was evaluating it, in controlled tests that is.

If you want to see a more detailed explanation of that, I have done a video on it:

|

@cleeds

I really don't think ASR has much power or influence at all. It's just a noisy group with grievances, which is very common today.

Well, I know more than one company that has completely retooled their entire product development and product strategy precisely because of the work we have been doing (think Schiit). So we do have influence, having nearly 3 million visitors a month which puts us at or near the top of all audio based sites on the Internet.

The main grievances, as evidenced by threads like this, come from people who don't want to come along. They would rather cling to "beliefs" than accept the reality of objective and science based evaluation of audio. Why? Because in some cases it negates their praise for some product. Instead of learning from that, they choose to go after ASR. And for what? Us providing more data than you had before about your purchases? There is no better definition of asking, heck demanding, to put one's head in the sand.

By all means, do that but don't make up stuff about ASR. At least do some homework before creating FUD like this.

|

@1extreme

I used to follow their rankings because I thought well maybe at least they would identify a component that is a disaster but no longer. Why, because a few months ago I started listening again to my MHDT Orchid Tube DAC which as you probably all know is an R2R ladder DAC and I was amazed at the quality of music it was producing over my delta sigma DAC’s, especially with acoustical music like traditional jazz which I mostly listen too. Out of curiosity I looked on the ASR site and Amir had ranked the MHDT Pagoda DAC, which is the same as the Orchid DAC but with XLR outputs as the WORST DAC THEY HAVE EVER MEASURED. They have the Pagoda DAC at the farthest right in the red scale with the lowest ranking. Never even bothered listening to it.

Here is the problem you have with your argument: anyone with measurement gear can verify my findings. No one can do that with your subjective claim. Maybe you are right, but we don't know. We don't know because you didn't follow any protocol to make sure you are only evaluating the sound of the device and nothing else.

We need to know for example that the knowledge of a DAC being R2R didn't influence your perception. We need to know if you match levels when evaluating audio gear. We need to know if you repeated your testing enough to arrive at reliable results. We all know that in casual listening tests like yours, any and all perceptions can exist. I can listen to said R2R DAC and convince myself that it sounds like you say without said controls. Indeed I routinely perceive differences that are not there. We know they are not there because measurements show evidence of that, and blind controlled listening confirms the same.

This is what separates us and why ASR has Science in the middle of it. Audio science with zero ambiguity says your evaluation of audio is faulty and without value. When folks with those claims are tested formally, they cannot repeat their outcomes. It is for this reason that claims like yours cannot be part of any paper for example submitted to Audio Engineering Society, ASA, etc.

As with other aspects of life, you can be a science denier and live your life happily. Just don't put that opinion forward as an argument in mixed company. And certainly don't use it in a thread aiming to create FUD against the other camp.

|

@cleeds

Exactly. The measurements and data have little value if not correlated with what we hear.

They absolutely are correlated with what you hear, based on the science of psychoacoustics, which is entirely based on listening tests. The problem you all have is that you don't want to hear, pun intended, the consequences of that. Instead you want to live in a fantasy world where differences that don't exist, or are below audible, are so obvious that your wife can hear them from the kitchen. That kind of made up effect is psychological and can never be caught or explained in a proper, science based, review of an audio product.

|

@invalid

@thespeakerdude how would you know if say an amplifier is audibly transparent in a listening tests when listening through speakers or headphones when no headphones or speakers are transparent?

Answering for him :), you look at measurements. If the frequency response is flat and independent of load, and distortion and noise are below the threshold of hearing, then you can very confidently declare it transparent. This analysis assumes perfect speakers. To the extent the speaker is not, the job gets easier, which is why amps with noise and distortion above the threshold of hearing are also declared transparent.

If anyone challenges you saying they hear the difference on their speakers/headphones, then you can perform a level matched blind test. If they can't prove any audible difference in such a test, then the case is closed with respect to their claims.

|

@mastering92

Would you consider having your test results/measurements verified by other industry experts? I don’t see a problem with that. If I were you, I would welcome 3rd party validation of my work.

Consider it? That is like asking a Chinese person if he would consider eating rice! My measurements get verified by manufacturers every day of the week and twice on Sunday. You think these companies just lay low and ignore what I am producing?

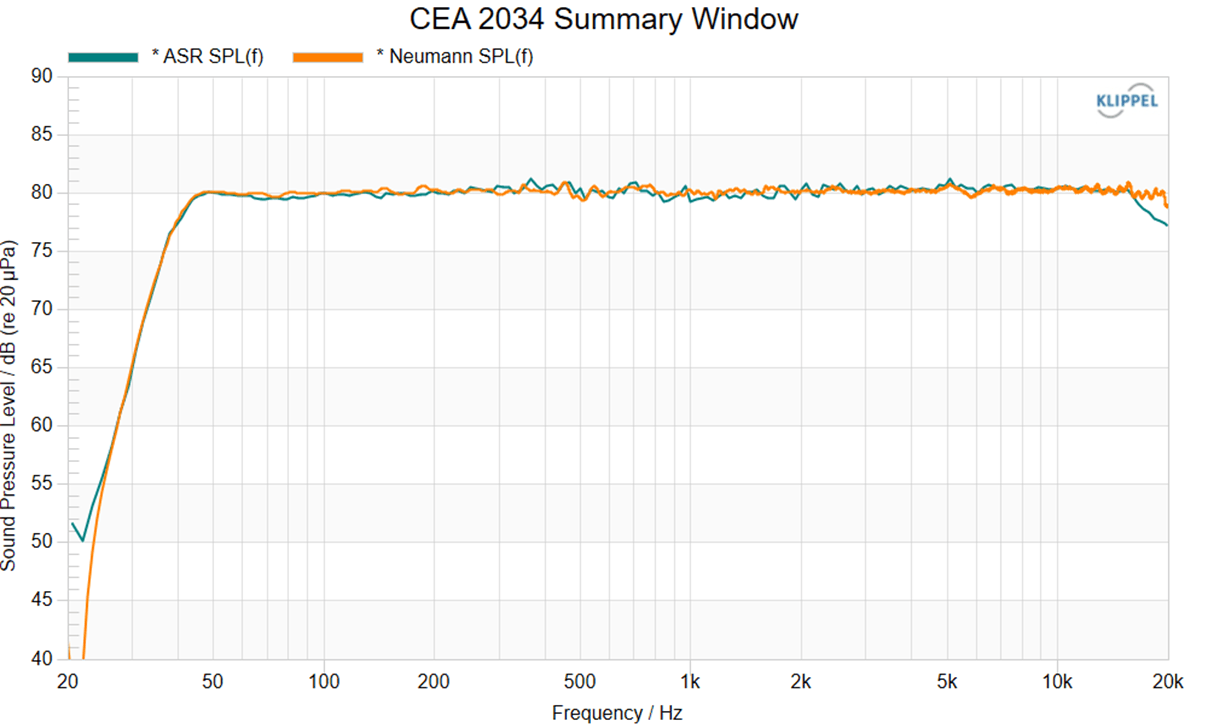

Countless companies have asked for my Audio Precision project files and I happily provide them so that they can make the same measurements. To the extent I have a relationship with a company, I even offer the measurements for them to verify prior to publication. Here are some quick examples, starting with a German company you should know, Neumann:

"EDIT: received an overlay of Neumman measurements and mine:

Here is Denon:

"I grabbed a preliminary set of measurements from the DAC section of the 3800H and ran it by the company. Within typical margin of error, the measurements were the same as company's own."

Here is American company RSL:

"I was contacting the company regarding another product so while I was at it, I asked them to review the frequency response measurements. They are generally in agreement with the results you are about to see."

We also have heavyweight reviewers and engineers with their own measurement gear verifying my work. Here is Seymore who writes for AudioExpress:

"If there’s anything in his data that you think he’s hiding, then request the AP project files. When I have, Amir has cheerfully provided them."

Seymore has also tested some of the same units I have with same results. In this case the designer of a phono stage claimed my measurements were wrong so with permission of the owner, I sent the unit to SIY (Seymore):

"As you can see by comparison, our measurements correlate quite closely (though we use opposite numbering for our channels, which I'll blame on my Hebrew education, learning to write from right to left). So I can proceed without worrying about major inconsistencies."

It is also not hard to replicate my measurements after seeing them. Such is the case with Professor "Wolf/L7" in China where he tests domestic products that I also happen to test. Results are almost always in agreement.

I also helped another reviewer (Erin) who had gotten a Klippel NFS measurement system to get up to speed by sending him the very sample I had tested (JBL monitor).

Really, there is nothing to hide here. I put out these measurements with full expectation that they will be scrutinized both on ASR and by manufacturers and other testers. If you have a third-party in mind to verify my results, I am happy to send them one of my tested samples for them to re-test. You pick the place, make it worth their while to do the testing and I will do the rest.

I suggest reading ASR for a while to better understand what we do, so as not to ask questions for which there are trivial answers.

|

@thyname

What does that have to do with what I asked. A “member “ can send you a bunch of stuff for various reasons. Does that make you feel happy and accomplished with your life?

Also, if he spent so much money on a cable (assuming not a $20 Ali fake), don’t you think he is NOT your audience?

Sounds like you forgot your elitist argument that only poor people living in their parents home frequent ASR to find cheap Chinese gear. So I post examples of many members sending me a ton of expensive gear indicating they have the means to purchase some. So definitely not poor as you claimed and assumed.

And they are precisely our audience. Many send their gear in after coming from your camp and then watching my videos and reviews. They then wonder if they were taken for a ride or if their expensive gear really performs. I do the testing and then they know. In many cases their reaction is "lesson learned" and no longer follow claims by folks like you on fidelity. Or Joe reviewer on youtube.

And no, none of his cables were "fake." I post the review of his S/PDIF cable from Nordost. You have a reason to claim it is fake? And that the owner of tens of thousands of dollars in DAC electronics would buy fake $20 cables?

|

@nyev

@amir_asr , thank you for sharing your perspectives. I have an education in computer engineering. But being an audiophile, I just don’t agree with the position that the science and measurements can totally explain our perceptions. I have a fundamental belief that science cannot explain all of the dimensions that impact our subjective interpretation of physical sound waves. Why? You have suggested folks get upset when ASR rejects a component that they subjectively praise. I think you are correct in many, many cases. It’s why people get so fired up about ASR. In my case I don’t care if ASR rejects a component that I subjectively enjoy - that doesn’t bother me in the slightest because if I enjoy it that’s all that matters to me. So why do I follow my subjective judgement over science? I simply don’t believe that science can FULLY explain, at our present level of understanding, how sound waves are subjectively interpreted by humans.

First, thank you for your kind attitude in asking this question. :) Much appreciated.

On your point, it is very true that we don't understand why we perceive what we perceive. Advancing that knowledge though is the domain of neurologists who want to diagnose disease. The science we follow is psychoacoustics, which is the "what" we hear and don't hear. If needed, we do draw from neuroscience but in general, we don't need to.

Example: we know most people can't hear above 20 kHz. The why has to do with the design of the ear. But we don't need to know that. We simply conduct controlled tests and find out the highly non-linear frequency response of our hearing. And then we use that to build things like lossy audio codecs (MP3, AAC, etc.) which work remarkably well in fooling people into thinking they are hearing high fidelity sound. Again, we can look at features of our hearing like IHC, filter banks, etc. but we don't need to, to build a lossy codec.

By the same token, we can measure a device's electrical characteristics and then determine if they fall below our threshold of hearing. Once they do, the what is what matters, not the why. We can declare the device transparent.

Now, I should note that listening tests play a huge role in audio science. Every speaker and headphone I test relies on decades of psychoacoustics and controlled testing to develop the target responses. Again, it doesn't matter why we are the way we are. In speaker testing for example, we all seem to like a neutral response even though we have no idea how the music was mixed and mastered! We have an interesting compass inside us that says deviations from flat on-axis response are not preferable.

To be sure, our hearing is complex. For example there is a feedback loop from the brain to the hearing system to seek out information in a noisy environment. This is the so called "cocktail party effect" where we can hear people talking to us even though there is so much background noise with others talking. The brain dynamically creates filters to get rid of what you don't want to hear, and hear what you want to hear.

This causes a problem in audio testing. You listen to product A. Then you go and listen to product B, hoping to find a difference. Your brain obeys your orders and will tune your hearing differently. All of a sudden you hear a darker background. Details become obvious that were not. None of this is a function of device B however. It is happening because you have knowledge of what you are trying to do, and use it to hear things differently in a comparison.

Due to understanding of above, we perform testing blindly. Once you don't know which is which, your brain can't bias the session. Actually it tries but we run enough trials to find out if that is a random thing, or due to actual audible differences.

So as you see, we understand what we need to understand to determine the fidelity of audio products. Said products are not magical. They have no intelligence. Measurements, as such, powerfully tell us what they are doing.

|

@thyname

@amir_asr great and dandy but why anyone would pay thousands of dollars for a Mola Mola DAC if a $50 CCP DAC can measure just as good within humans’ audible thresholds of hearing? Aesthetics?

Explain this to me.

Many reasons, independent of sound. They like the support, warranty, good looks, resale value, pride of ownership, features, etc. I replaced my $6000 Mark Levinson DAC with a Topping. Even though the Topping is more capable (supports more formats) and has better objective fidelity, I miss the old DAC with its big and beautiful display and enclosure.

It is for the same reason I own a Reel to Reel tape deck. It is not because it has better fidelity or is cheap (tapes cost hundreds of dollars!). But because I love looking at its reels and looks.

Net, net, there is nothing wrong in buying high-end gear that costs a lot of money. Just make sure it doesn't sacrifice fidelity to get there. When it does, owners, like the Chord owner mentioned before, end up buying the much cheaper and more performant electronics and are happy for it.

BTW, there is no $50 DAC that competes with Mola Mola. The highest SINAD ranking DAC in my testing costs US $900. Go ahead and tell me this is for out of work kids with no money. 'cause it sure as heck is not in my book.

But yes, a $9 dongle from Apple often outperforms multi-thousand dollar DACs from high-end companies. Shame on them for shortchanging people on performance when they could have done far, far better. If only they had properly tested their ideas of low noise, distortion, etc. before releasing the boxes to you all.

|

@tcotruvo

In the debate about science vs senses as a way to evaluate audio equipment…

??? Audio science heavily embodies listening tests and so includes our senses. It however does that correctly by testing just what we hear, not what we pick up with other senses.

|

@andy2

Why then SS amp always has a haze where as tube amps always has a transparent sound but we all know tube always have inferior frequency response vs. ss.

In short you can't measure it.

Oh we can and do. Measurements say that they don't have haze. And proper listening tests confirm the same. That you think otherwise means you are in dire need of performing a controlled test where you don't know which is which. Otherwise, your bias as stated above will always give you what you want to hear, pun intended.

In my testing of many tube amps, they either have low enough distortion to sound just like solid state devices, or they have sufficient distortion to sound muddy at lower volumes and then get quite distorted. No way are they transparent in any form or fashion. Now, if you are not good at hearing such non-linear impairments, and you operate under the above bias, then your conclusion will be what you stated.

See, all explainable.

|

@thyname

How can you know? Objective proof? Check Ali Express. Available to your business friendly US market place. Ali express. App. There is a thread here in Audiogon. Did you send your Nordost cable to the company for authentication?

How do I know you are a real human being? Maybe you are the output of AI/ChatGPT. Can you prove you are not?

Alternatively you could be a shill for high-end audio companies. Can you prove you are not?

As to the cable, the company could have reached out to me stating I tested a clone. Why don't you write to them and ask? Until then, I am pretty sure of what I tested as I trust the owner saying he bought all those expensive cables on the insistence of the people who sold him his Chord DAC.

You guys are really running on empty to be making these arguments....

|

@mastering92

@amir_asr

mp3s are not great. Sure, you could fool someone in to thinking that 2 files are the same on a smartphone over bluetooth, but upon further inspection; in a more resolving system, you could tell the original .wav file and .mp3 file apart easily, no matter what the kbps was, even 320 kbps.

Self-bragging is common when it comes to lossy compression. Problem is, when most of you are put to blind tests, you flunk being able to tell the source from the compressed one. And no, resolving system has nothing to do with it. The fact that you say that tells me you don't know what it takes to hear such differences. As a trained listener in this domain, I can tell differences with just about any headphone on any system.

While it is true that MP3 was not designed to be transparent, at high bitrates, especially at 320 kbps, it easily fools even the most ardent audiophiles. I know because we have tested them. While at Microsoft, I told my signal processing manager to recruit the large body of audiophiles we had there for testing our lossy codec. We ran a large scale test among our self-selected audiophile group. Results were embarrassing for me as an audiophile. None could remotely match our trained but non-audiophile listeners.

To hear those impairments, you need to learn to hear them. It does not come naturally to audiophiles. This learning also involves understanding of the algorithms and where the weak points may be.

I have lost count of how many times a presenter at an audio show has whispered to me that they were playing lossy audio to audiophiles who had no idea, thinking it was uncompressed content!

You may be the exception -- there is a small percentage of audiophiles who are good at this. To prove that, you need to provide results of a double blind test to show that and not just claim it. Here is an example of me passing such test:

foo_abx 2.0 beta 4 report

foobar2000 v1.3.5

2015-01-05 20:26:27

File A: On_The_Street_Where_You_Live_A2.mp3

SHA1: 21f894d14e89d7176732d1bd4170e4aa39d289a3

File B: On_The_Street_Where_You_Live_A2.wav

SHA1: 3f060f9eb94eb20fc673987c631e6c57c8e7892f

Output:

DS : Primary Sound Driver

20:26:27 : Test started.

20:27:01 : 01/01

20:27:09 : 02/02

20:27:16 : 03/03

20:27:22 : 04/04

20:27:28 : 05/05

20:27:34 : 06/06

20:27:40 : 06/07

20:27:51 : 07/08

20:28:01 : 08/09

20:28:09 : 09/10

20:28:09 : Test finished.

----------

Total: 9/10

Probability that you were guessing: 1.1%

-- signature --

7a3d0c1aaaf8321306ff6cfdd1f91ff68f828a54
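For anyone wondering where the "probability that you were guessing" line in a report like this comes from, here is a minimal sketch. It assumes each trial is an independent 50/50 coin flip for someone who hears no difference, and sums the binomial tail. The function name `p_guessing` is made up for illustration and this is not the plugin's own code.

```python
from math import comb

def p_guessing(correct: int, trials: int) -> float:
    """Chance of getting at least `correct` right out of `trials` by pure 50/50 guessing."""
    favorable = sum(comb(trials, k) for k in range(correct, trials + 1))
    return favorable / 2 ** trials

# Score from the report above: 9 correct out of 10 trials.
p = p_guessing(9, 10)
print(f"Probability of guessing: {p:.1%}")  # 11/1024, which rounds to 1.1%
```

The same function can be used to see how the odds change with more or fewer trials before deciding how long a session needs to be.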

So please don't make such assertions unless you have evidence to back it. Fish stories in audio are quite common. Reliable facts, not so much....

|

@jayctoy

If I remember Robert Harley was warned by either Mike Moffat or Jason from Schiit audio that yagdrassil dac don’t have good measurements ? It turned out the yagdrassil got a stellar review from Robert Harley.

An outcome which he hands to any product that costs money. Why would that in any form or fashion mean anything in the context of measurements being reliable or not? I only recommend 1/3 of all products I review. What is that percentage for RH? 99%? 100%?

BTW, Schiit publishes measurements of everything they build now. As noted, this came from work that we did at ASR, highlighting the value of such things. So I would not pick this as an example of anything.

|

@nyev

All this to say, that sometimes, maybe a lesser performing component may sound better given lesser surrounding components in one’s system. Which again, judging a piece by measurements would never provide any useful context in these situations.

Thank you for another measured response. On this point, we never foreclose such a possibility. All we ask is that evidence of sonic superiority come through hearing alone, and have the statistical rigor to be reliable. Should this evidence come about, we certainly will throw out the current measurements and investigate further what is going on.

What we face unfortunately is so and so says it sounds good. Well, I can get you people who say the opposite. And neither can be shown to be reliable. Someone mentioned Robert Harley. He raved about my Mark Levinson amplifiers. Stereophile editors hated them. To their credit, they showed some measurement issues although that was not normative with respect to comments from the subjective reviewer (who no doubt was biased against class D amps).

So what we ask is simple: please conduct a test where all factors have been removed other than the sonic fidelity of the two devices. Match levels. Play the same content on the same system. And repeat at least 10 times and see if you can get 8 out of 10 right. It is not much to ask for, as I perform many blind tests to back up my arguments. If that is too much, and it can be, then we had better run with a) measurements of the device and b) the science and engineering behind how the device works and why something would or would not be audible.
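On the "match levels" step, here is a minimal sketch of one way to check and correct a level mismatch between two captures of the same test signal. Everything in it is an assumption for illustration: the helper names, the 1 kHz tone at a 48 kHz sample rate, and device B being 0.5 dB louder. In practice the levels would be measured at the output of each device rather than generated in software.

```python
import math

def rms(samples):
    """Root-mean-square level of a sequence of samples."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))

def level_difference_db(a, b):
    """How much louder a is than b, in dB."""
    return 20 * math.log10(rms(a) / rms(b))

def matching_gain(reference, other):
    """Linear gain to apply to `other` so its RMS level equals that of `reference`."""
    return rms(reference) / rms(other)

# Hypothetical captures: the same 1 kHz tone, with device B 0.5 dB hotter than device A.
rate, freq, n = 48000, 1000, 4800
device_a = [math.sin(2 * math.pi * freq * i / rate) for i in range(n)]
device_b = [s * 10 ** (0.5 / 20) for s in device_a]

before = level_difference_db(device_b, device_a)
gain = matching_gain(device_a, device_b)
device_b_matched = [s * gain for s in device_b]
after = level_difference_db(device_b_matched, device_a)

print(f"Mismatch before: {before:.2f} dB, after: {after:.2f} dB")
```

The point of doing this first is that a small loudness difference is easily heard as "better," so it has to be taken out before any comparison of fidelity means anything.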

I should note that when it comes to transducers, measurements are less predictive and I have on a number of occasions liked something that didn't measure well. The Wilson TuneTot was one such speaker. It was an expensive bookshelf speaker ($12K), so it would have been easy to go with the flow: bad measurements and expensive, so let's damn it. But I could not after my listening tests, and reported that. Got heat for it from my own crowd but so be it.

|

@andy2

That is because a good tube amp will cost a lot more money compared to a SS amp. To get the same performance you need to spend quite a bit more. If money is not an issue, most people would go with tube.

Back to the myth that money buys performance. It can, but you only know it if you measure.

That aside, almost all content you listen to was created and approved by the talent using solid state electronics. Is your claim that they heard it with haze? If so, that haze must be part of the experience they want you to have! Best to leave it just like guitar distortion. :)

|

@thespeakerdude

I think the test you gave yourself is too easy 😀 Your point is well taken in regards to trained listeners and MP3. I think a better test is to serve up 10 different tracks which may be MP3 or may be wave, and then test how well listeners do at accurately assessing if the track is compressed or not. I wonder if even the trained listeners will be challenged in that case without a reference.

I didn't give that test to myself. I was challenged on a major forum by an objectivist to be able to tell MP3 from the original, with him claiming that no one could. At the same time, there had been a challenge on that forum to tell 16 bit content from 24 bit. Content for that was produced by AIX Records, which is well known for the quality of its productions. So to remove any appearance of bias in the selection of material, I grabbed the clips from that test, compressed them to MP3, and posted those results. The clip was not at all "a codec killer" where such differences are easier to hear.

On the type of test you mention, I am not a fan of them for the reason you mention. It is harder to identify the original vs compressed that way because you have to now know what the algorithm does to create or hide sounds. In other words, is an artifact part of the original content or was it removed.

Our goal with listening tests should always be to try and find differences, not make it hard for people to find what is there. Because once we know an artifact exists, we can fix it. Making the test harder to pass goes counter to that.

That said, I and many others were challenged to such a test on the same major site above. We were given a handful of clips and asked to find which is which. Results were privately shared with the test conductor. When I shared my outcome, he told me I did not do all that well! I was surprised as I was sure two of the clips were identical and thought that was put in there as a control.

Fast forward to when the results are published and wouldn't you know it, I was "wrong." We had a regular member with huge reputation for mixing soundtracks for major films and he got it "right." Puzzled, I performed a binary comparison and showed that the two files were identical! Test conductor was shocked. He went and checked and found out that he had uploaded the same file twice! He declared the test faulty and that was that.
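A binary comparison like that takes only a few lines. The sketch below is not the tool I used at the time. It hashes each file with SHA-1 (the same kind of fingerprint the ABX report prints for each file) and treats equal size plus equal hash as identical. The file names and contents are hypothetical stand-ins created on the spot so the example runs by itself.

```python
import hashlib
import os
import tempfile

def sha1_of(path, chunk_size=65536):
    """SHA-1 fingerprint of a file, read in chunks so large clips are fine."""
    digest = hashlib.sha1()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()

def files_identical(path_a, path_b):
    """True when both files have the same size and the same SHA-1 hash."""
    if os.path.getsize(path_a) != os.path.getsize(path_b):
        return False
    return sha1_of(path_a) == sha1_of(path_b)

# Hypothetical stand-ins for the test clips: two uploads of the same data, one different.
folder = tempfile.mkdtemp()
clip_1 = os.path.join(folder, "clip_1.wav")
clip_2 = os.path.join(folder, "clip_2.wav")
clip_3 = os.path.join(folder, "clip_3.wav")
for path, data in [(clip_1, b"same audio data"), (clip_2, b"same audio data"),
                   (clip_3, b"other audio data")]:
    with open(path, "wb") as f:
        f.write(data)

print(files_identical(clip_1, clip_2))  # same bytes uploaded twice
print(files_identical(clip_1, clip_3))  # genuinely different clip
```

Running a check like this on the test files before publishing a listening test is a cheap way to catch an accidental duplicate upload.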

Despite that, as you saw, I will repeat again, I don't want to make blind tests too hard on purpose. We need to be interested as much in positive outcomes as negative.

|

@andy2

Amir has claimed that he personally listened to "200" pieces of equipment per years. That is around 366/200 or 1.5 days per equipment. I mean come on who in the world can take you seriously if you only spend such a short time evaluating an equipment.

In comparison, people at Stereophile spend weeks on an particular equipment before they publish the review.

They can spend months and it wouldn't make their reviews reliable. If you know what you are doing, including the science and engineering of the gear and what the measurements show, you can zoom in and find issues. You don't sit there listening to random track after random track for weeks. That tells you nothing.

Every one of my listening tests uses the same, revealing and proper tracks. I focus on what measurements say is wrong with the unit and test level of audibility. A headphone amp that has too little power with high impedance gets tested that way. And with content designed to find audible issues.

The same gear tested by one of your favorite reviewers reads like a music review. Oh listen to this album and that album. What? I want to know what the equipment is doing, not what music you listen to.

Proper research has been performed to find such tracks. See this:

You need to stop listening to your lay intuition and embrace science of how to do such evaluations correctly. Formal testing shows long term listening to be much less revealing than instantaneous ones. See this published research on that:

I implore you to start paying attention to decades of research on what it takes to properly evaluate audio gear. The lay understanding and intuition stuff needs to go out the window.

|

|

@invalid

This is a subjective hobby after all, isn’t it, or do you guys just sit around and look at charts, graphs and oscilloscopes. I enjoy the music more because I don’t worry about how my equipment measures.

No, you worry about a ton of things in your system that don't matter while we enjoy music. You think your wires may have sound. You think your amp has sound. You think the table you put the system on has sound. You think your AC has sound. You think digital sources have sound. You think, well, you get the point.

We on the other hand, buy performant systems with confidence and sit back and enjoy it. We know why it sounds right. You don't. You are forever chasing ghosts in audio. The anxiety that comes with that must be immense.

Ask anyone who has converted from your camp and above is the answer they give you. While you keep upgrading, tweaking, replacing stuff to remove that other "veil" and get blacker backgrounds, we queue up another track to enjoy.

So I suggest getting off that talking point. That dog don't hunt....

|

@alexatpos

Well, I am very curious to hear any system that was assembled through blind testing of its individual components....

You could do that. Or, if you are in our camp, use measurements to rule out audibility in many components (i.e. they are transparent). For others such as speakers, you can rely on companies that perform double blind tests, or use research that correlates what sounds good to us with respect to measurements. I have done this across some 200+ speakers now. The research works wonderfully. Same mostly works for headphones as well although measurements there are subject to more variations than speakers.

Remember, the job here is not to give you 100% answer. It is to get rid of 90% of the variability by weeding out clearly broken and non-performant gear. The rest you can choose from and take in factors beyond performance.

Compare that to the alternative a few of you follow. Completely unreliable listening tests. A thousand and one opinions about every piece of gear, every cable, everything you can name. True wild west with zero regard for decades of research into what makes sense.

|

@mastering92

I’m sure that most of these people doing reviews have a standard set of reference tracks; or at least a background/strong interest in audio; enough so to make their impressions reliable.

You are a fountain of untrue assumptions. Do you even bother to fact check anything before just throwing it out at us? Didn't I already show you how proper, peer reviewed research shows audio reviewers to not be remotely capable of producing reliable assessment of speakers? Here is Dr. Olive's research again:

Do you see how Audio Reviewers are even worse than audio sales people? If they are reliable as you say, how come they failed so catastrophically here?

They failed because they don't have trained ears despite all the gear they have listened to.

That you declare them to be reliable means that you have not spent any time reading and understanding this topic. You continue to shoot from the hip, throwing claim after claim without an ounce of proof. All lay intuition meant to defend your position in audio. Spend less time here and more time reading and learning about the topic. Here is Dr. Olive's blog if you don't know where to get the research papers:

Or watch my video where I go through this and explain it in an understandable manner.

|

@alexatpos

In the same time and please dont take this personally, I am surprised that there are people pretentious enough, who are trying to convince others that their choices are the ’right ones’. But, than, why stop only on hi fi? I am sure that there are more interesting challanges, or more noble ones?

Your surprise should be directed at whoever created this thread, directly challenging what we do at ASR, and manufacturing comments about me and what we do to boot. And those of you flagging me personally, causing the forum software to send me a notification. I should ignore it but then folks go on and on here, piling on falsehood on top of falsehood. A fellow here even went after my personal career!

As to your car example, no, it doesn't apply. There is a ton of scrutiny of car performance through measurements such as 0 to 60, braking, cornering, etc. from press and online reviewers. As such, a car company can't claim that it has built the fastest car in the world when in reality, it is slower than a Honda Accord. Yet, that is what happens in audio. Until we came about, objective evaluation of audio was relegated to an appendix at the end of a few reviews and that was it. Yet, every audio company claimed to recreate reality of live music, etc.

We get started and shine a light on equipment performance and folks are up in arms. How dare you do this and give audiophiles more information? As you say, demand is made to "leave them alone!" Personally, I do leave them alone. We have a great audiophile society locally and you don't see me going there trying to change the opinion of the many subjectivists there. Thankfully many come to me at meetings and ask me questions.

So please don't pull that stunt and debating tactic at me. The reality is that you are bothered by what we do so you want it stopped. Well, it can't be stopped. People are seeing the value of reliable information about audio and are abandoning what some of you have taught them. Not everyone of course. But we are not running a sprint but a marathon. And not trying to boil the ocean....

|

@nyev

I know the argument remains unresolved - are these things in my mind, or is it that science has not yet figured out how to measure certain things that we perceive in audio?

We can't measure what you perceive. You listen to a track that brings you joy. We can't measure joy. What we can measure is the sound coming out of your audio gear. That is the sound that is "heard." What is perceived includes many other variables that go beyond sound. I enjoy watching my Reel to Reel play music. It brings me joy. But that has nothing to do with the sound. The sound is excellent but I hear background noise which is not so nice -- something we absolutely measure.

Put more strongly, you have to identify what is sound and only sound in your perception. You can't look at something and give an opinion because your knowledge of what you are seeing pollutes your perception. A person who does not like horns will dislike any horn speaker. Put them in a blind test though, and they won't bring such preconception to the party. To wit, I was shocked how good these JBL horn speakers sounded in double blind tests at Harman that I took:

So bottom line, bring us an experiment where only the sound is evaluated and shown to not be random outcome and I will show you a measurement for it. The moment you include other things, we can't measure it due to no fault of science, measurements or engineering.

And oh, there is no science being developed to determine what you state. The science is completely settled that only results of controlled audio tests matter, where only your ear is involved. All else is not worth even looking at.

|

@nyev

Below are three examples where I experienced subjective differences in sound and I genuinely wonder if you would be able to measure the differences I was perceiving.

That would be jumping a step. As I explained, first it has to be established that what you are perceiving is sound and only sound. To do that, you need to run those tests blind and repeat 10 times and see if at least 8 out of 10 times you can tell which cable is which. Bring that to me and I will guarantee you that I can measure that effect.
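
To see why a bar like 8 out of 10 is a reasonable one, here is a small Python sketch of the odds of reaching it by pure guessing. It assumes each trial is an independent coin flip (50% chance of a correct answer); the function name is just for illustration.

```python
from math import comb


def p_at_least(k, n, p=0.5):
    """Probability of k or more correct answers in n trials when guessing."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))


# Chance of getting 8, 9 or 10 right out of 10 with no real ability to tell
# the cables apart: (45 + 10 + 1) / 1024.
print(f"{p_at_least(8, 10):.4f}")  # 0.0547
```

So a guesser clears that bar only about 1 time in 18, which is why a pass is taken as evidence that a real audible difference exists.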

I just reviewed three JPS Labs cables (XLR, USB and Power). I performed listening tests on all three. All sounded different than my generic cables. Measurements did not show any evidence of those differences. Why? Because my testing was unreliable, ad-hoc subjective tests. These tests produce all kinds of outcomes for me as they do for you. The problem as I keep saying is that our perception is so variable that it doesn't lend itself to reliable conclusions of fidelity differences until we put in some controls.

|

@andy2

That is true in theory but I don’t think Amir even doing that. But jitter is difficult to test in frequency domain, just to name a few. There are more.

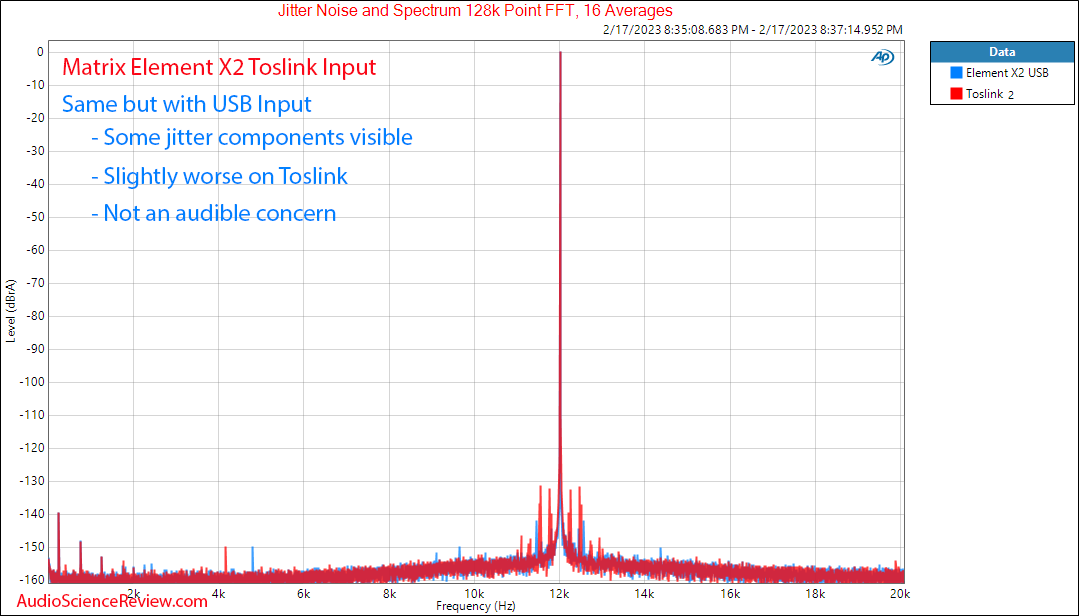

We don't "measure" jitter in time or frequency domain. Jitter modulates the primary tone that we can play. We perform a spectrum analysis of that tone and jitter sidebands jump right out:

See those sidebands at a whopping -130 dB. We can easily measure them by simply sampling the analog output of the DAC. No need to probe inside which is problematic anyway as the DAC chip likely has jitter reduction.

As a major bonus, we can apply psychoacoustics analysis to the jitter spectrum and determine audibility as you see noted on the graph.

The other thing is that jitter is not one number as is often talked about. Above you see multiple jitter sources at different frequencies and levels. This makes FFT analysis far superior to any time domain jitter measurement that spits out either a number or even a distribution.

FYI, my audio analyzer has a time domain jitter meter but it is nowhere near as good as the above spectrum and at any rate, only works on digital sources, not analog.
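
The way jitter turns into sidebands around a tone can be illustrated with a small simulation. This is only a sketch under stated assumptions, not the analyzer's method: the sample rate, tone frequency, jitter frequency and the 1 ns jitter amplitude are all made-up values, the jitter is a single sine wave, and a plain single-bin DFT stands in for a real FFT with windowing.

```python
import cmath
import math

FS = 48_000          # sample rate in Hz (assumed)
N = 4_800            # 0.1 s of samples, so every tone lands on an exact bin
F_TONE = 12_000      # test tone in Hz (assumed)
F_JITTER = 1_000     # frequency of the jitter component in Hz (assumed)
JITTER_PEAK = 1e-9   # 1 ns peak sinusoidal timing error (assumed)


def jittered_tone():
    """Sample a sine wave at instants that wobble by the jitter amount."""
    samples = []
    for n in range(N):
        t = n / FS
        t_err = JITTER_PEAK * math.sin(2 * math.pi * F_JITTER * t)
        samples.append(math.sin(2 * math.pi * F_TONE * (t + t_err)))
    return samples


def bin_magnitude(samples, freq):
    """Magnitude of the DFT at the bin matching freq (no window needed here)."""
    k = round(freq * N / FS)
    acc = sum(x * cmath.exp(-2j * math.pi * k * n / N) for n, x in enumerate(samples))
    return abs(acc)


def sideband_dbc(samples):
    """Level of the upper jitter sideband relative to the main tone, in dB."""
    carrier = bin_magnitude(samples, F_TONE)
    sideband = bin_magnitude(samples, F_TONE + F_JITTER)
    return 20 * math.log10(sideband / carrier)


measured = sideband_dbc(jittered_tone())
# Small-jitter approximation: sideband/carrier = pi * f_tone * jitter_peak
predicted = 20 * math.log10(math.pi * F_TONE * JITTER_PEAK)
print(f"measured {measured:.2f} dBc, predicted {predicted:.2f} dBc")
```

With these assumed numbers the sideband sits roughly 88 dB below the tone, appearing at the tone frequency plus and minus the jitter frequency. Each additional jitter source would add its own pair of sidebands at its own level, which is why a spectrum says more than a single number.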

|

@tsacremento

@amir_asr, I have a few questions regarding measuring equipment. Genuine questions, not “poking the bear” nonsense.

Appreciate the constructive tone. :)

- Can unit-to-unit variations significantly affect measurement outcomes?

I don't think so. These devices are not mechanical as to require assembly line alignments and such. We (I and membership) have done some spot checks and confirmed that randomly purchased units match my test results. There have been a few exceptions. I once tested a Schiit pre-amp (?) where one channel had 10 dB lower SINAD than the other. Schiit reached out to me and offered another unit without that deviation. I guess in a perfect world we would buy another unit on our dime and re-test. But I took Schiit's word that the unit selected was picked at random.

This concern used to come up a lot in the past. When it heated up a lot, I had Topping reach out to me saying they would pay for me to buy every product of theirs I had ever tested to see if the performance was different. I have a lot of respect for their ethics in this regard and chose not to do that. But their offer stands.

As to part variations, yes, this exists. Fortunately for most components, the actual value is not critical. A 1000 microfarad power supply filter will do its job whether it is 20% lower or higher in value. In a few places, this matters a lot and there, companies pick high precision 1% parts and such. In addition, we have design techniques such as feedback which eliminate a lot of variations from output of the audio device.

Finally, there are other people making measurements similar to mine now. A fellow in China who goes by the alias L7Wofl for example, reviews Chinese gear, testing other samples than me, with results that correlate excellently with mine.

Net, net, I don't think this is a factor to be worried about especially in the context of large variations in offerings from different companies. Other answers below.

|

@tsacremento

- Does a unit’s chain-of custody or provenance impact your confidence in the results of a unit’s test results being truly representative of the model?

For the vast majority of cases, no. Most of the reasoning was explained in my last response. Let's remember that if there is an issue with golden samples, it would affect other reviewers far more than me because they exclusively get samples from manufacturers. In my case, a large number of products for test come from members. A good portion of these are purchased new and drop shipped to me. And a large percentage of used ones are current products. I occasionally test vintage products or discontinued ones because they are available on the cheap on the used market. Manufacturers are welcome to challenge the results of any used product tested but I have yet to encounter one.

|

@tsacremento

- Will the difference between a 90 dB S/N component and a 120 dB S/N component be audible in a typical listening room with a 30 dB background noise level?

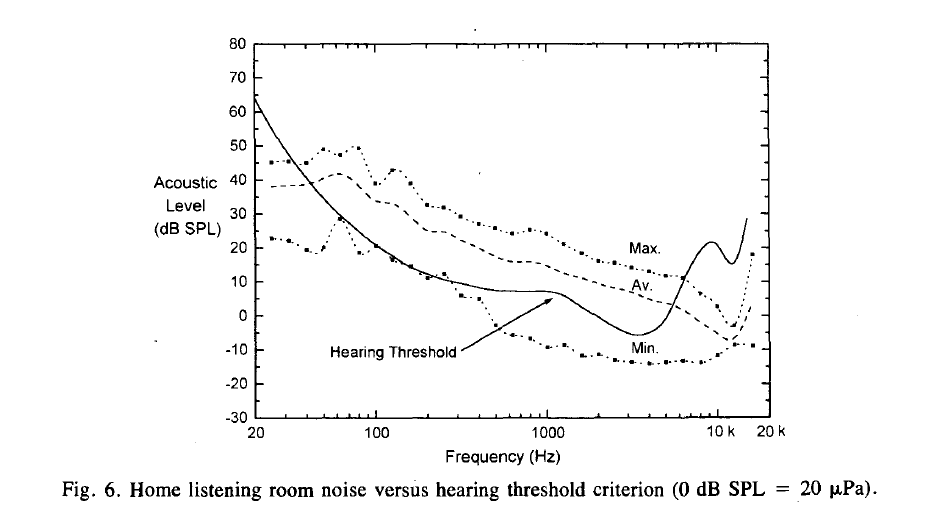

This is a misconception. I actually published a paper on this a few years back based on a peer-reviewed journal article and research at AES. Our hearing sensitivity is highly non-linear. 60 dB at 20 Hz will be silent to us whereas -5 dBSPL is audible at 3 kHz! So to know if room noise is an audible barrier, you have to perform a translation and then superimpose it on top of our hearing sensitivity. This work was done based on a survey of listening rooms and here are the results as I posted in my article:

As you see, room noise drops exponentially with increasing frequencies. This is because it is so much easier to block high frequency noise. Low frequency noise, on the other hand, easily travels through walls and even concrete! Your meter will be happy to pick up noise from a freeway miles away, yet your ear will not.

As you see in the above graph, we can build rooms that are completely silent even though they could have bass noise of 30 dB.

Using this research, we see that if we want to have reference quality maximum loudness (measured at 120 or so dB in live unamplified concerts), we need about 20 bits of dynamic range or 120 dB. Put inversely, ideally you want your system to have a noise floor of -120 dB.
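
The link between bit depth and dynamic range can be checked with a few lines of Python. This sketch uses the textbook formula for an ideal quantizer with a full-scale sine wave (about 6.02 dB per bit plus 1.76 dB); it is a theoretical ceiling, not a claim about what any particular DAC achieves, and the function names are just for illustration.

```python
import math


def dynamic_range_db(bits):
    """Ideal dynamic range of an N-bit quantizer for a full-scale sine."""
    return 20 * math.log10(2**bits) + 10 * math.log10(1.5)


def bits_needed(target_db):
    """Number of ideal bits (possibly fractional) to reach target_db."""
    return (target_db - 10 * math.log10(1.5)) / (20 * math.log10(2))


for bits in (16, 20, 24):
    print(f"{bits} bits -> {dynamic_range_db(bits):.1f} dB")

print(f"120 dB needs about {bits_needed(120):.1f} bits")
```

A 120 dB target works out to a little under 20 ideal bits, which matches the "about 20 bits" figure above, and 20 ideal bits gives roughly 122 dB.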

Now if you are listening in a living room, then such constraints can be lowered a fair bit. But why? You can buy a $100 DAC that performs this well, once again proving that achieving full transparency in electronics doesn't cost much money. Just requires careful shopping and not following marketing statements of fidelity but reliable measurements.

|

|

@tsacremento

- How audible will the difference between an electronic component producing 0.1% THD and one producing 0.001% THD be when played through a transducer generating between 1.0% and 2.0% THD?

The nature of distortion is different in electronics vs speakers. For example, speakers have no self-noise (passive ones anyway) whereas electronics do. This is why you can put your ear next to a tweeter and hear hiss and buzz: it comes from the electronics driving it, not from the speaker.

On pure distortion front, speakers produce their most distortion in bass region where we are not critical anyway. An amplifier distorting will do so at all frequencies. Have an amp clip and you can hear it on any speaker even though it may not reach the bass distortion of said speaker.

There are state of the art speakers and headphones that have clocked 80+ dB SINAD which is the limit of what I can measure for them.

That said, detecting non-linear distortion is not easy for most listeners. So you could say that maybe people can't hear even elevated distortion of 1 to 2%.

Here is the thing though: the only reason a piece of electronics generates 0.1% THD is sloppy design or bad design ideas. It is almost never the case that it is done to make the equipment cheaper. Indeed, by far, the situation is the reverse: you pay far more money for gear with more distortion and noise! Yet you can buy a device from companies that care that has provably inaudible noise and distortion for a very reasonable cost. We learn about this by measuring. If we didn't, we would be going by the marketing words of expensive gear and not objective, reliable data.
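
Since the question is posed in percent and measurements are usually quoted in dB, here is a small Python sketch for converting between the two. It uses the standard amplitude-ratio conversion; the function names are just for illustration.

```python
import math


def percent_to_db(percent):
    """Convert a distortion ratio in percent to dB relative to the signal."""
    return 20 * math.log10(percent / 100)


def db_to_percent(db):
    """Convert a level in dB relative to the signal back to percent."""
    return 100 * 10 ** (db / 20)


for pct in (2.0, 1.0, 0.1, 0.001):
    print(f"{pct}% THD is {percent_to_db(pct):.1f} dB")

# An 80 dB SINAD means noise plus distortion sit 80 dB below the signal.
print(f"80 dB SINAD is {db_to_percent(-80):.3f}%")
```

So 0.1% is -60 dB, 0.001% is -100 dB, 1% is -40 dB, and a transducer measuring 80 dB SINAD has noise plus distortion of 0.01%.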

|

@alexatpos

Sorry, but these are all great news. First, you have listened something, than, there are differences, and most important, the cheapest cable was the ’best’. Does it mean that we all should buy generic cables?

You should but not because of my listening tests. Because we as engineers and people who understand how audio products work, and decades of research into what kind of listening test is valid and what is not, point to generic cables performing their function way beyond call of duty.

Think about it. The cable is the most harmless item in your audio gear. A power cable has bandwidth way, way beyond what it needs to convey mains power. It has no distortion of its own. It is as pure as it can get relative to your electronics and transducers. Yet folks focus on them and spend thousands of dollars on them. Why? Simple: they don't know how to perform an unbiased audio listening test. I showed you how folks testing pianos do that. Talent shows routinely use blind testing. So do people who test foods. Do they all belong to a cult? Really?

So no, don't go wasting money on premium cables because you think they sound better. My testing actually shows in some cases that their cables are actually worse when it comes to noise! Fortunately we are all too deaf to hear those artifacts but we can prove that company claims are just wrong.

|

@alexatpos

@prof I think we may have very different view on how science works, If something cant be 'scientifically' proven and yet, 'existst' (at least by testimonials of so many) than perhaps 'the scinece' (or better the people who claim that they are 'scientists') should try to find new methods or tools to examine those 'events'.

If you say aliens land in your backyard every night, you don't get to claim that we need better radars to detect their arrival. You need to first prove that what you claim to be there really is. Science has provided the mechanism for that. It is called controlled testing where the only variable is sound. You involve many other factors and senses and then ask that science go and prove based on sound alone, that what you heard is real? You have to be joking.

There is currently no research going on to validate what you all claim to hear. None. Why? Because you have not provided any evidence of something real. Do that and science will happily investigate. Stick to your biased testing and we know why you arrive at wrong conclusions. We don't need to advance the science any more. We have known for decades that people say they hear things sighted that vanish when tested blind. And that is that.

Put more directly, you need to advance your testing methods. Science is years and years ahead of you. To the extent you have no use for such science, then science doesn't owe you more work. It certainly doesn't need to spend money chasing people's imagined effects.

|

@tsacremento

From reading through many test results on the ASR website, it appears to me that achieving ultra-low SINAD performance is almost trivial for good line-level electronics, but not so much for power amps. Am I not interpreting the test results correctly, or is this true? If true, is this issue due to the amount of gain required?

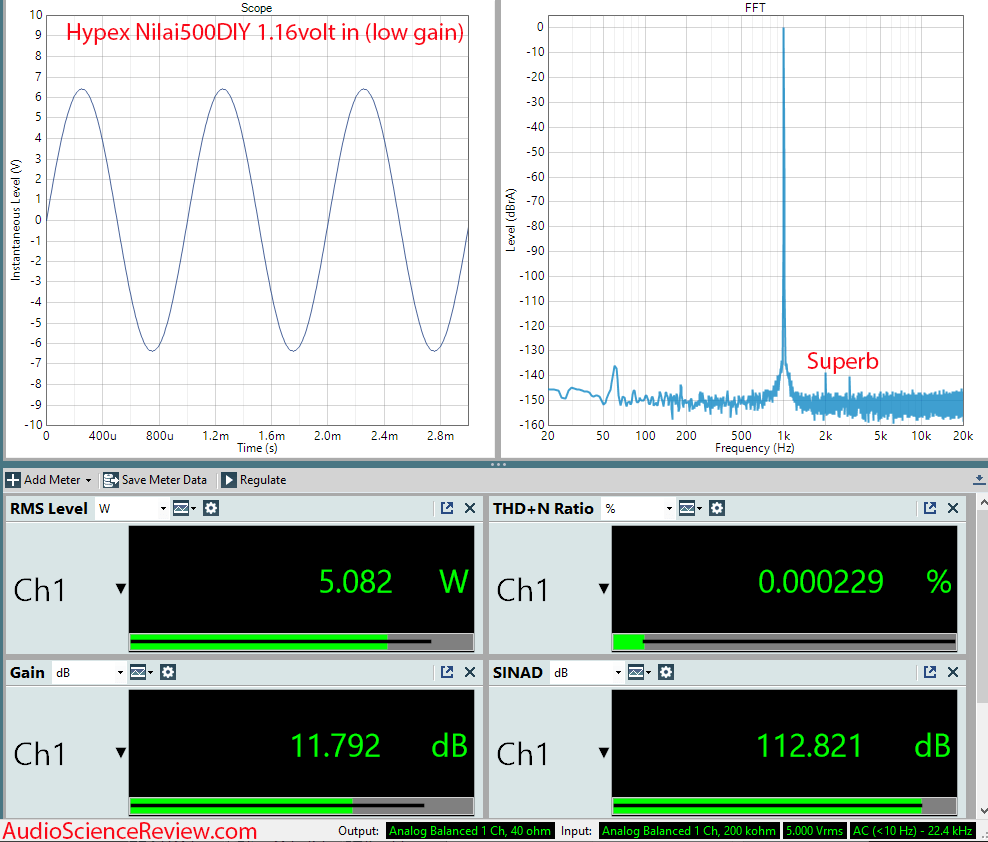

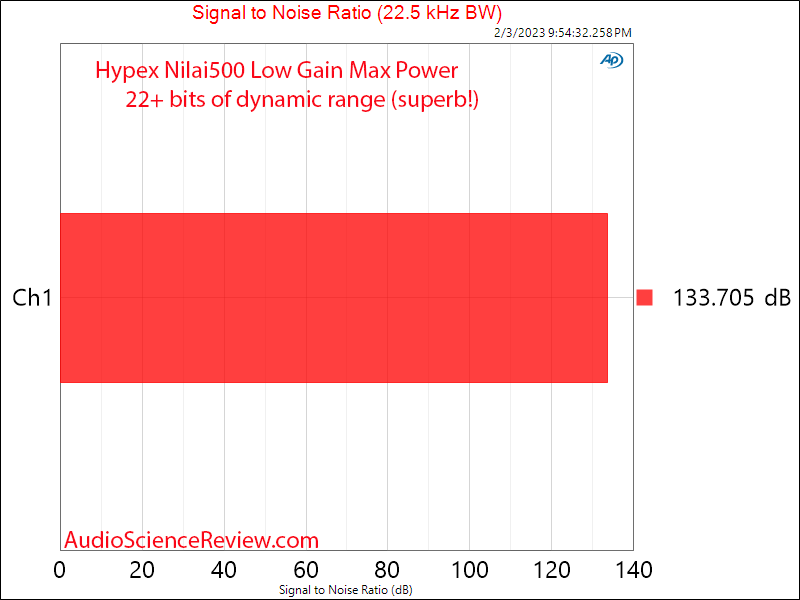

As a general rule, it is easier to achieve great performance where heat and large amounts of current and voltage are not involved. Fortunately, amplifiers are finally catching up. High-performance class D and a few class AB amplifiers have come very close to the performance of state of the art DACs and achieved assured transparency. Look at the vanishingly low distortion in this new Hypex amplifier:

And that is at 5 watts. Increase the power output and SNR shoots way up with it:

There is a premium for this level of performance but nothing remotely like what high-end companies charge for far lower performance.
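
The reason SNR climbs with output power is simple arithmetic, sketched below in Python. It assumes the amplifier's noise floor stays fixed as the signal gets larger, which is a simplification, and the higher power figures are hypothetical examples rather than measurements of the amplifier above.

```python
import math


def snr_gain_db(power_low_w, power_high_w):
    """Extra SNR in dB when moving from one output power to a higher one,
    assuming the noise floor does not change (simplifying assumption)."""
    return 10 * math.log10(power_high_w / power_low_w)


# Hypothetical comparison points against the 5 watt measurement.
for watts in (50, 200):
    print(f"5 W -> {watts} W adds about {snr_gain_db(5, watts):.1f} dB of SNR")
```

Every tenfold increase in power adds 10 dB, so going from 5 watts to 50 watts adds 10 dB and going to 200 watts adds about 16 dB under this assumption.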

|

@cleeds

More ad hominem, appeal to authority, and bandwagon fallacies from a measurementalist. As for the "chasing ferries" remark - you’re getting more and more colorful. Perhaps science is not your calling.

I am not a scientist. I follow science. You disdain the profession. What do you expect to be called?

And I am not a measurementalist. I am all about what I can prove, not what I can fantasize that can easily be disproven. Measurements are repeatable and provide valuable insight. Why else would you hate them? You must feel the mist of audio science brushing against your face.

As I keep saying, and evidenced by numerous reviews, I perform far more listening tests than all of you do, combined. But I do so in ways that are defensible, not catering to marketing claims of companies and acting like their PR agencies as you are.

Really, in just about every post I provide evidence. Do you just believe in power of words to overcome facts?

|