Maggies sound terrible according to the measurements.

I assume you mean the Magnepan LRS and not the entire line, as that is the only model I have reviewed. Assuming you do mean the LRS, there were clear audible issues to my ears as well:

Subjective Speaker Listening Tests

I first positioned the panel right at me and started to play. What I heard sounded like it was coming from a deep well! I then dropped the little rings on the stand and repositioned the speaker as you see in the picture (less toed in). That made a big difference, and for a few clips I enjoyed decent sound. Then I played something with bass and it was as if the speaker was drowned under water again. It wasn't just an absence of deep bass but an overall quietness on top of that.

Even when the speaker sounded "good," you would hear these spatial and level shifts that were really strange. As the singer's voice changed tonality, it would sometimes shift left and right and change in level, no doubt due to uneven frequency response. There was also a strange extended tail to some high-frequency notes that seemed to go on forever.

Just when I thought I had the speaker dialed in, I leaned back some and the tonality got destroyed. You had to keep your head in the proverbial vise to get the "right" sound out of the LRS.

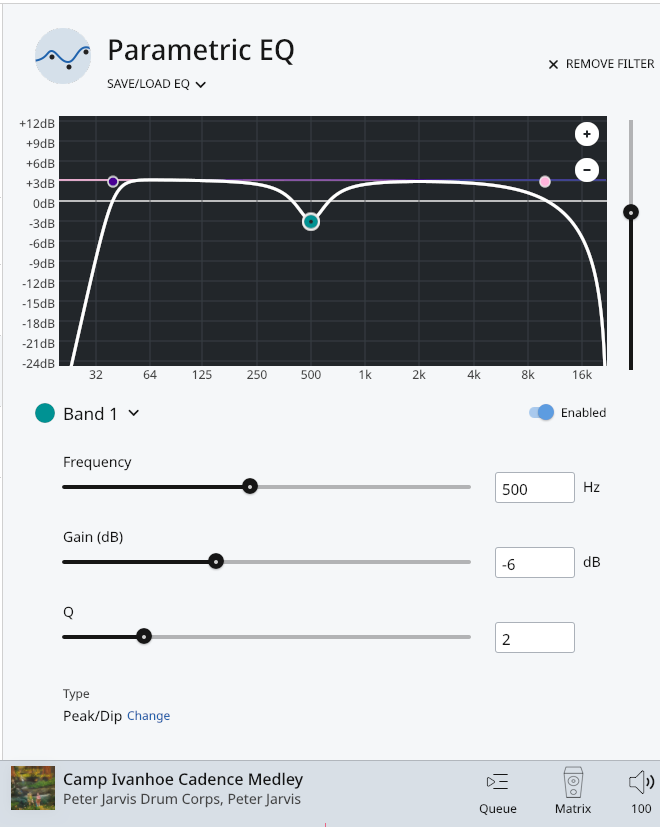

I applied a quick and dirty inverse fix to the response to get some semblance of neutrality:

The one PEQ shown, combined with an overall lift of the entire response, made a huge difference. The speaker no longer sounded dull and lacking in both bass and treble. Alas, after listening some, the highs got to me, so I put in the right filter to fix that. And while the LRS could handle the boost in low frequencies well, bringing for the first time some tactile feedback, it did start to bottom out, so I had to add that sharp filter for the extreme lows.

Once there, I was kind of happy until I played the soundtrack you see at the bottom. Man, did it sound horrid. Bland, and some of the worst bass I have heard.

When it did sound good -- which was on typical show audiophile tracks -- the experience was good. Alas, every track would sound similar with the same height and spatial effects.

This is a highly specialized speaker that is poor as a general-purpose one. People fall in love with its spatial qualities due to its dipole design and the tall image it provides. So I am not surprised you speak of it as if it is perfect. But perfect it is not. Not remotely so.
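For anyone wanting to reproduce the "sharp filter for extreme lows" idea on their own EQ, here is a minimal sketch of a second-order high-pass biquad built from the formulas in Robert Bristow-Johnson's Audio EQ Cookbook. The 30 Hz cutoff, 48 kHz sample rate and Butterworth-style Q are assumptions for illustration, not the settings actually used on the LRS.

```python
import cmath
import math


def rbj_highpass(fc, fs, q=1 / math.sqrt(2)):
    """Second-order high-pass biquad, RBJ Audio EQ Cookbook form.

    Returns (b0, b1, b2, a1, a2) normalized so that a0 == 1.
    """
    w0 = 2 * math.pi * fc / fs
    cos_w0 = math.cos(w0)
    alpha = math.sin(w0) / (2 * q)
    a0 = 1 + alpha
    b0 = (1 + cos_w0) / 2
    b1 = -(1 + cos_w0)
    b2 = (1 + cos_w0) / 2
    a1 = -2 * cos_w0
    a2 = 1 - alpha
    return (b0 / a0, b1 / a0, b2 / a0, a1 / a0, a2 / a0)


def magnitude_db(coeffs, f, fs):
    """Magnitude of a normalized biquad at frequency f (Hz), in dB."""
    b0, b1, b2, a1, a2 = coeffs
    z1 = cmath.exp(-2j * math.pi * f / fs)  # z^-1 evaluated on the unit circle
    h = (b0 + b1 * z1 + b2 * z1 * z1) / (1 + a1 * z1 + a2 * z1 * z1)
    return 20 * math.log10(abs(h))


fs = 48_000
hp = rbj_highpass(30, fs)  # hypothetical 30 Hz protection filter
for f in (10, 30, 100):
    print(f"{f:>4} Hz: {magnitude_db(hp, f, fs):6.2f} dB")
```

With this Q the filter is about 3 dB down at the cutoff and falls at 12 dB/octave below it; cascading two such sections is one way to get a steeper slope if the speaker still bottoms out.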

|

And cheap Topping DACs sound great because they measure that way.

Oh, tens of thousands of your fellow audiophiles disagree with you. We all use them and enjoy the incredible transparency they bring to our music enjoyment.

And as you acknowledge, they are available at ridiculously low cost compared to what high-end companies charge for their DACs, which are often distortion and noise factories.

|

I see Amir has created a thread on ASR that there is a 25% off deal on Revel Be products.

https://www.audiosciencereview.com/forum/index.php?threads/revel-be-series-discounted-by-25.46219/

And what is one brand Amir’s company - Madrona Digital - sells? You guessed it! Revel products.

I posted that since the membership has an interest in the line and wants to know about the discount. There was zero call to action to come buy anything from Madrona, whose business is anything but retail (we have no showroom, no stock, nothing). I was also clear that people need to buy from their local dealers:

ta240 said:

I know they will definitely order them for me and maybe even with less overhead give me a good deal. But I like looking/listening to things in person before I buy.

I hope anyone who does that, proceeds and buys it from the same dealer. It would be quite improper to then go and buy it discounted online or whatever.

Occasionally someone contacts me to get Revel speakers for them at a discount. Half the time they go and get them from someone else afterward, which is fine with me. I provide this option for people but at no time advertise or promote it on the forum. If I really wanted to sell something, I would have put a link at the top saying "come here to buy this and that." But you see none of that whatsoever.

These arguments have no legs anyway. The last thing I want to do is be an audio salesman. So I suggest not projecting. I am not you. I am not so motivated by a few dollars like this as to put the ethics of what I do in doubt.

|

Your target audience is not Galen’s market anyways.

What is that target? Those who don't need any evidence from the manufacturer that a cable costing 10X more makes a difference in the sound coming out of their gear?

|

Care to elaborate: what is the “average audiophile”?

In this case, someone without the engineering background to understand why Ethan has to turn a pot every time he changes cables on his null tester, or why there is residual noise "if there is no difference." Do you fall in this category?
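The principle behind a null test fits in a few lines: subtract one signal from the other and see what is left. This is a hypothetical sketch of the general idea, not Ethan's actual tool or signals. It shows why the level trim matters. A 1% gain difference alone leaves a residual of roughly -40 dB, and once the gain is matched (here by least squares, standing in for the pot), only the part that is genuinely different remains.

```python
import math


def rms(x):
    """Root-mean-square value of a sequence of samples."""
    return math.sqrt(sum(v * v for v in x) / len(x))


def null_residual_db(ref, dut, match_gain=True):
    """Level of ref - g * dut relative to ref, in dB.

    With match_gain=True, g is the least-squares gain that best lines dut
    up with ref: the software equivalent of trimming a level pot.
    """
    g = 1.0
    if match_gain:
        g = sum(r * d for r, d in zip(ref, dut)) / sum(d * d for d in dut)
    residual = [r - g * d for r, d in zip(ref, dut)]
    return 20 * math.log10(max(rms(residual), 1e-20) / rms(ref))


fs = n = 48_000  # one second; both test tones complete whole cycles
ref = [math.sin(2 * math.pi * 1_000 * t / fs) for t in range(n)]
# Hypothetical "device under test": 1% low in level plus a small extra tone
dut = [0.99 * r + 0.001 * math.sin(2 * math.pi * 3_000 * t / fs)
       for t, r in enumerate(ref)]

print(f"no level trim:   {null_residual_db(ref, dut, match_gain=False):.1f} dB")
print(f"with level trim: {null_residual_db(ref, dut):.1f} dB")
```

Anything that is not a pure gain change (here the made-up 3 kHz component; in real gear, noise and distortion) survives the subtraction, which is why a residual exists even when two cables sound the same.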

|

Believe it or not, I read your “review” of the Iconoclast cables, and the subsequent exchange of you with Galen. Which I am certain you cut him off. No fuss, your site your rules.

I more than welcome manufacturers to comment. You are out of line anyway, as Galen never joined the forum to be cut off. He emailed me his response and I posted it verbatim in the review thread. There was nothing else from him to post. Go and ask him before making claims like this.

|

Sure! What low-end cables do YOU recommend?

For USB-A to -B I recommend Amazon Basics. They are cheap but flexible and have been ultra reliable. I am able to get state of the art measurements using them.

For RCA cables, I also like the 6-foot Amazon cable as a bargain choice. But there are many others such as WBC. I avoid the super generic ones as the connectors are so thin that they get loose after a bit.

For power cables, I use what comes with my gear. I do have a $99 AudioQuest one that I have started to use recently because it is flexible and has lower resistance than some of the thin AC cords. It hasn't made any difference in any measurement (or sound), but I have it so I use it.

For XLR cables, I use Mogami Gold. They are a bit pricey but incredibly reliable. I have bought generic ones, and while they have identical performance, their solder connections often break after a few plug/unplug cycles.

For speaker cables, I use whatever. For testing though, I like a flexible one, so I use high-strand-count silicone cables. These things are superb in how flexible they are (even when cold), and their strands are wonderful to work with whether you crimp or solder. They are relatively expensive though, so I don't bother to use them for long runs of speaker cable.

S/PDIF cables can make a measurable difference due to impedance mismatches, but nothing remotely close to audible. If you just want that comfort, it is best to get an impedance-matched (75-ohm) one.

For optical cable, I think the one I have I bought years ago from Monster (?).

HDMI cables can be tricky at higher speeds (>4K). Try to keep them as short as possible. But for audio, they make no difference.

BTW, I have a few high-end cables from Transparent, Monster, etc. which I don't use.

I think this is it.
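To put the S/PDIF "impedance mismatch" point in concrete terms: the fraction of the signal reflected at an impedance step is the reflection coefficient, Γ = (Z_load - Z_line) / (Z_load + Z_line). The sketch below assumes a nominal 75-ohm S/PDIF input fed by a hypothetical 50-ohm cable. It only quantifies the reflection and says nothing about audibility.

```python
import math


def reflection_coefficient(z_load, z_line):
    """Fraction of an incident voltage wave reflected at an impedance step."""
    return (z_load - z_line) / (z_load + z_line)


def return_loss_db(z_load, z_line):
    """Return loss in dB; larger means a better match (infinite when matched)."""
    gamma = abs(reflection_coefficient(z_load, z_line))
    return math.inf if gamma == 0 else -20 * math.log10(gamma)


# Nominal 75-ohm S/PDIF input driven through a hypothetical 50-ohm cable
print(reflection_coefficient(75, 50), return_loss_db(75, 50))
# A matched 75-ohm cable reflects nothing at that junction
print(reflection_coefficient(75, 75), return_loss_db(75, 75))
```

A 20% reflection is easy to see on test equipment, which is the "measurable" part. Whether it matters in practice depends on the receiver, and that is the part the measurements above say is nowhere near audible.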

|

@amir_asr : On cables. Thank you for answering my question. Case closed. People can figure out for themselves

My pleasure. And many have: they sold their fancy cables, switched to generic ones and are happier for it. More money for music and other good things in life. You are really underestimating the difference we have made in the mindset of audiophiles with these comprehensive reviews of such tweaks. Folks are learning and it is accelerating, knock on wood.

|

And again, you measured the speakers first and THEN listened?

Putting the cart before the horse and insuring your measurement philosophy bias.

Nope. I don't trust any subjective review that is not grounded in measurements. Such reviews express random views in totally uncontrolled situations. I don't see my role as being yet another subjective reviewer giving you such an opinion. You can get that from a myriad of other sources.

I listen for a specific purpose: to verify what the measurements show as far as audibility. Measurements may show a dip between 1 and 3 kHz. How audible is that? I put in a reverse filter to compensate. Then I perform AB tests, blind if needed, and assess that. Many times I find that the measurements are correct in that regard: the sound does get better. Such was the case with the Magnepan LRS. Sometimes the correction doesn't help, in which case I note that in the review and search for an explanation.
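Here is a minimal sketch of how such a "reverse filter" for a 1 to 3 kHz dip could be set up, using the peaking filter from the RBJ Audio EQ Cookbook. Centering it at the geometric mean of the dip edges and taking Q as center frequency over bandwidth are common rules of thumb. The +3 dB depth and 48 kHz sample rate are hypothetical values, not numbers from any review.

```python
import cmath
import math


def rbj_peaking(f0, gain_db, q, fs):
    """Peaking EQ biquad (RBJ Audio EQ Cookbook), normalized so a0 == 1."""
    a = 10 ** (gain_db / 40)
    w0 = 2 * math.pi * f0 / fs
    cos_w0 = math.cos(w0)
    alpha = math.sin(w0) / (2 * q)
    a0 = 1 + alpha / a
    return ((1 + alpha * a) / a0, -2 * cos_w0 / a0, (1 - alpha * a) / a0,
            -2 * cos_w0 / a0, (1 - alpha / a) / a0)


def response_db(coeffs, f, fs):
    """Magnitude of a normalized biquad at frequency f (Hz), in dB."""
    b0, b1, b2, a1, a2 = coeffs
    z1 = cmath.exp(-2j * math.pi * f / fs)
    h = (b0 + b1 * z1 + b2 * z1 * z1) / (1 + a1 * z1 + a2 * z1 * z1)
    return 20 * math.log10(abs(h))


fs = 48_000
f_lo, f_hi = 1_000.0, 3_000.0    # edges of the measured dip
f0 = math.sqrt(f_lo * f_hi)      # geometric center, about 1732 Hz
q = f0 / (f_hi - f_lo)           # rough Q from center / bandwidth
eq = rbj_peaking(f0, 3.0, q, fs)  # hypothetical +3 dB correction

for f in (100, 1_000, f0, 3_000, 10_000):
    print(f"{f:>8.0f} Hz: {response_db(eq, f, fs):+.2f} dB")
```

The peaking filter hits its full gain exactly at f0 and returns to 0 dB far from it. That is what makes an A/B comparison with and without it a clean test of whether the dip was audible.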

A bonus outcome of the above is that you get a set of filters anyone can apply to the same speaker. When doing so, they can judge whether the sound got better or not. This feedback is frequently shared and in many cases it is positive. Indeed, many people send me speakers/headphones just so that I create such a filter for them!

The above has worked so effectively that I now give dual review ratings for headphones: one as is and one with EQ correction:

Rating on the left says "fair." Rating on the right says "superb." Here are the listening test results post measurement:

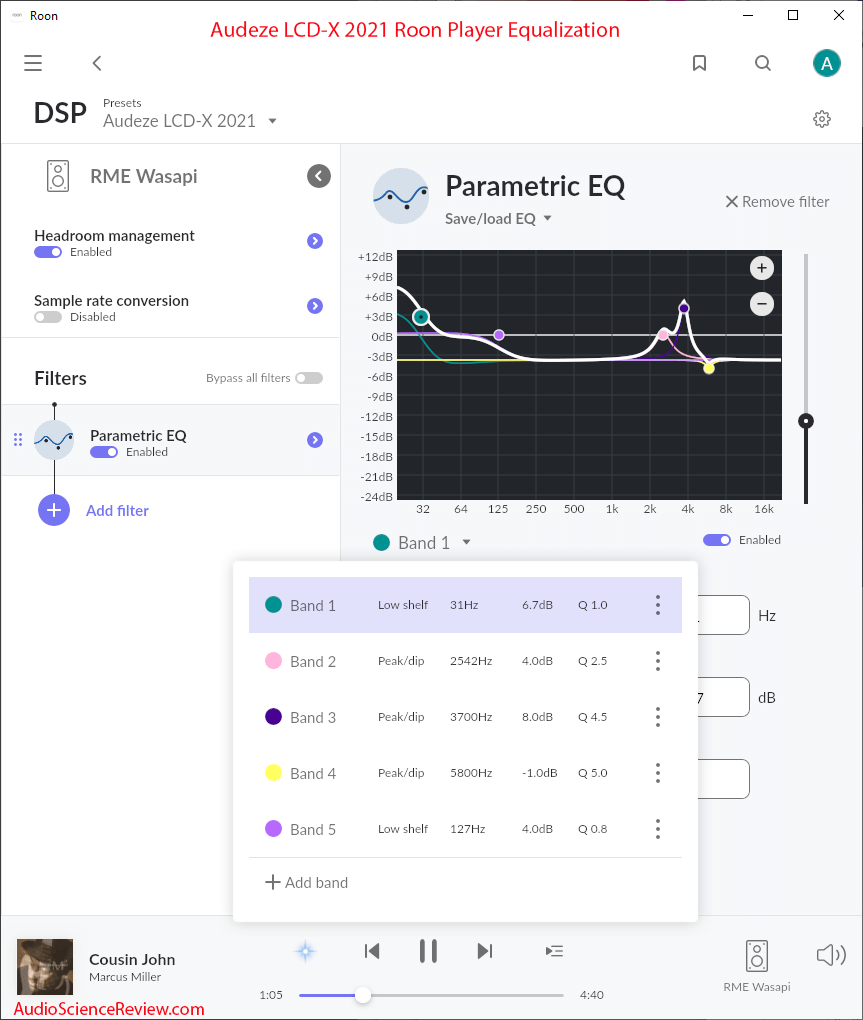

Audeze LCD-X Headphone Listening Tests and Equalization

The sound out of the box is quite boring and bland. So the equalization tool came right out:

I used dual filters to better shape the low-frequency boost, as it has a complex shape. I then used another pair to fill in the hole at 3 to 5 kHz. The final filter in yellow at 5.8 kHz is just a "stopper." I use a bit of negative gain to make sure there is no boost at that point and beyond in the frequency response. It helped keep the headphone from sounding too bright post EQ.

Once there, these headphones were a delight to listen to. The sound is now light and airy with really good spatial qualities. Dynamic ability is excellent letting you listen at any level with no hint of distortion. The sound is so nice that a day later, I am listening to them as I type this review.

[...]

I am happy to strongly recommend the Audeze LCD-X 2021 revision with equalization. Without it, it is a pass for me.

See how methodical the process is? It is not just a word salad with a bunch of unrelated talk about this or that album I listened to.

I explain all of this in one of my videos, along with proof points of why the type of subjective reviewing you ask for generates totally unreliable results:

https://youtu.be/_2cu7GGQZ1A
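The "stopper" in the LCD-X equalization (a little negative gain at 5.8 kHz and beyond) can be approximated with a high-shelf biquad. Only the frequency and the intent are stated, so the filter type, the -1 dB gain and the Q below are assumptions. The sketch again uses the RBJ Audio EQ Cookbook formulas and checks that the low end is untouched while the top end sits at the shelf gain.

```python
import cmath
import math


def rbj_high_shelf(f0, gain_db, fs, q=1 / math.sqrt(2)):
    """High-shelf biquad (RBJ Audio EQ Cookbook), normalized so a0 == 1."""
    a = 10 ** (gain_db / 40)
    w0 = 2 * math.pi * f0 / fs
    cos_w0 = math.cos(w0)
    alpha = math.sin(w0) / (2 * q)
    k = 2 * math.sqrt(a) * alpha
    b0 = a * ((a + 1) + (a - 1) * cos_w0 + k)
    b1 = -2 * a * ((a - 1) + (a + 1) * cos_w0)
    b2 = a * ((a + 1) + (a - 1) * cos_w0 - k)
    a0 = (a + 1) - (a - 1) * cos_w0 + k
    a1 = 2 * ((a - 1) - (a + 1) * cos_w0)
    a2 = (a + 1) - (a - 1) * cos_w0 - k
    return (b0 / a0, b1 / a0, b2 / a0, a1 / a0, a2 / a0)


def response_db(coeffs, f, fs):
    """Magnitude of a normalized biquad at frequency f (Hz), in dB."""
    b0, b1, b2, a1, a2 = coeffs
    z1 = cmath.exp(-2j * math.pi * f / fs)
    h = (b0 + b1 * z1 + b2 * z1 * z1) / (1 + a1 * z1 + a2 * z1 * z1)
    return 20 * math.log10(abs(h))


fs = 48_000
stopper = rbj_high_shelf(5_800, -1.0, fs)  # hypothetical -1 dB shelf at 5.8 kHz
for f in (1_000, 5_800, 12_000, 20_000):
    print(f"{f:>6} Hz: {response_db(stopper, f, fs):+.2f} dB")
```

A shelf is one plausible choice because it acts on everything above the corner, which matches "that point and beyond." A narrow peaking cut would only touch a band around 5.8 kHz.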

|

Edit: As I said earlier, I don’t begrudge anyone making money as long as they are honest with their audience (or in Amir’s case, honest with themselves). Amir will say he makes a comment on all his reviews that his company sells Revel and that’s all the disclaimer he needs. I agree. But he chastises every single other reviewer who does essentially the same (i.e., advertising, YouTube monetization, affiliate links which are labeled clearly, etc).

This is wrong on multiple fronts:

1. I don't chastise YouTubers for making money. I am an avid viewer of YouTube product reviews, which without exception are monetized in multiple ways, as you state.

2. I never put any link or other information for people to buy any product from me or my company. I don't even say what my company does unless asked.

3. Every review immediately starts with the source of the product: member loan, my own purchase or company. Almost no other audio reviewer I know does this.

4. ASR has incredible potential for monetization given our massive traffic and the number of products I review. But I will not go there. I don't need the money and certainly don't need to have it cloud the transparency of the reviews.

A couple of days ago a company offered me money to get their gear tested sooner and I told them NO!

5. As a policy and core principle, we will NOT allow our people to try to monetize our traffic/membership for their own interest. This is a universal rule that is heavily enforced by other forums. We are actually lenient to a fault here. Go ahead and spam this forum with sponsored links and see how long you last.

Bottom line, you are completely out of line with your comparison.

|

I never said the LRS was perfect. It IS a perfect example of where measurements fail.

Well, measurements show why it is not perfect. Measurements would have failed if they hadn't shown that!

|

Additionally, I have noticed that whenever JA measures a large floor standing model, like a panel or other design, he always mentions that this is a factor in his results....I would suspect this is something that Amir might want to remember.

I don’t need to, because my measurement system (Klippel NFS) doesn’t care. JA performs manual measurements of speakers outdoors, so the size of the speaker is a major barrier for him.

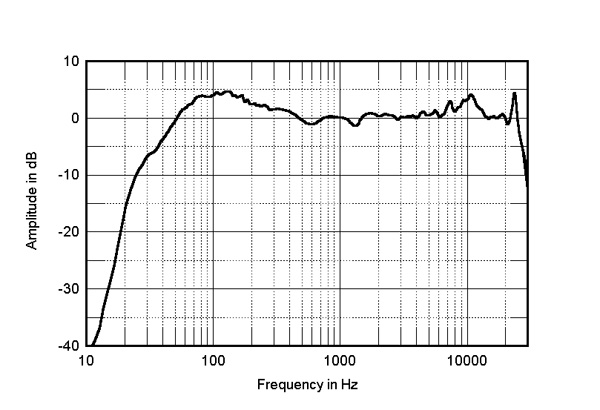

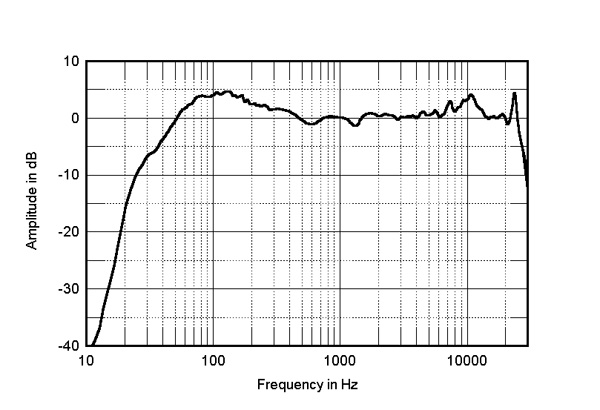

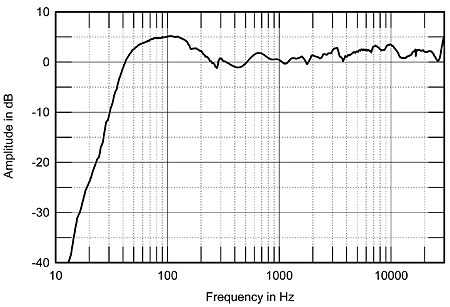

Note that JA’s measurement method exaggerates bass response due to its use of near-field measurements (it assumes the baffle is infinitely wide, which it obviously is not). If you look, he always shows a hump there. Here is the Perlisten S7t measurement:

See that hump between 80 and 300 Hz? It is a measurement error (some or all of it). Its exact frequency and shape varies with speaker size/design.

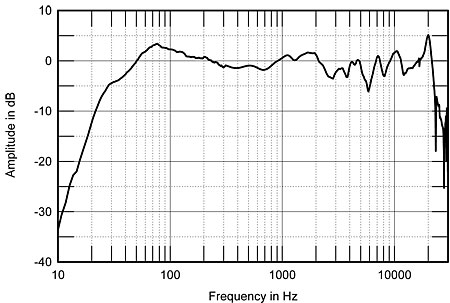

Here is the Wilson Sasha W/P:

See the hump around 80 Hz?

Monitor Audio:

JA is fully aware of it but doesn’t apply a correction, leaving you guessing as to what the response really is. Fortunately, he sometimes says this in the review.

He badly needs the same system I have, but he is not going to get the funding from his subjectivist-heavy owners and editors.

FYI, I have measured 300 speakers now, and my work gets scrutinized by manufacturers down to a dB at times! So please be careful in making accusations of lack of knowledge on my part. It is unkind and wrong.
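Why does the near-field hump move with speaker size? A commonly quoted DIY rule of thumb puts the baffle-step transition at roughly 115 divided by the baffle width in meters. Below that frequency a real box radiates into full space, while the infinite-baffle assumption keeps it radiating into half space, which is worth up to about 6 dB. The sketch is only that rule of thumb plus the 6 dB figure. Real baffle step also depends on cabinet shape, driver placement and edge geometry, which a full measurement system captures and a formula does not.

```python
import math


def baffle_step_hz(baffle_width_m):
    """Rule-of-thumb baffle-step transition frequency: about 115 / width (m)."""
    return 115.0 / baffle_width_m


# Half-space vs full-space radiation at low frequencies: pressure doubles,
# so an infinite-baffle assumption can overstate bass by up to about 6 dB.
MAX_EXCESS_DB = 20 * math.log10(2)

for width in (0.2, 0.3, 0.45):  # hypothetical baffle widths in meters
    print(f"{width:.2f} m baffle: step near {baffle_step_hz(width):.0f} Hz, "
          f"up to {MAX_EXCESS_DB:.1f} dB of excess below it")
```

Wider baffles push the transition lower, which is consistent with the hump landing at different frequencies on different speakers.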

|

Amir states this is a fault of the speaker, well sure it is, but there are options to address the issue..called sub woofers.

Easier said than done. If you deploy one without DSP and measurements, you are going to be lost forever trying to integrate the two.

Regardless, if one speaker has usable bass and the other doesn't, the latter deserves a lower score, not an excuse like, "oh, you could add a sub to it."

|

@amir_asr You have said that you have been insulted here by myself and others, yet you have the temerity to post what you just did about the experience level of all audiophiles. You put all of the group into one basket, that of being clumsy and ignorant consumers..and therefore easy marks. Not ok in my books.

What were you insulted by, and who says "ALL" audiophiles are alike? Countless audiophiles are on ASR following and believing in audio science and engineering.

I am talking about people we both know who change a screw in an AC outlet and say another veil was lifted from the sound. Maybe it was, maybe it wasn't. We would only know if he did a simple blind test of that. He didn't, and observing that doesn't make what I said an insult.

|

After several tests, the designer then made a conscious decision to let it be, since it sounded much better in its original untamed state after extensive listening tests. This is what many of us mean by "listen first and then measure". Putting more emphasis on listening and what sounds best as a means to an end, rather than making graph lines flat.

Oh, I know perfectly well what you mean. Before starting Audio Science Review, I co-founded a forum specifically focused on high-end audio. Folks there spend more on audio tweaks than most of you spend on your entire system! That is where @daveyf and I met. So there is nothing you need to tell me about audiophile behaviors this way. I know it.

Here is the problem: there is no proof point that the assertion of said designer is true. You say he did "extensive listening tests." I guarantee that you have no idea what that testing was, let alone that it was extensive. What music was used? What power level? What speakers? How many listeners? What are the designer's qualifications when it comes to hearing impairments?

A story is told and believed. Maybe it is true. Maybe it is not. After all, if he saw a significant measurement error, logic says the odds of it sounding good are low. Why else would you tell that story? If the odds are low, then we had better have a documented, controlled test that shows it does sound better. Not just something told.

BTW, the worst person to trust in these things is the person with a vested interest. I don't mean this in a derogatory way. Designers just want to defend their designs and be right. So we had best not put our eggs in that basket, and ask for proof instead.

I have posted this story from Dr. Sean Olive before, but it seems I have to repeat it. When he arrived at Harman (Revel, JBL, etc.) from the National Research Council, he was surprised at the strong resistance of both engineering and marketing people at the company:

To my surprise, this mandate met rather strong opposition from some of the more entrenched marketing, sales and engineering staff who felt that, as trained audio professionals, they were immune from the influence of sighted biases.

[...]

The mean loudspeaker ratings and 95% confidence intervals are plotted in Figure 1 for both sighted and blind tests. The sighted tests produced a significant increase in preference ratings for the larger, more expensive loudspeakers G and D. (note: G and D were identical loudspeakers except with different cross-overs, voiced ostensibly for differences in German and Northern European tastes, respectively. The negligible perceptual differences between loudspeakers G and D found in this test resulted in the creation of a single loudspeaker SKU for all of Europe, and the demise of an engineer who specialized in the lost art of German speaker voicing).

You see the problem with improper listening tests and engineer opinions of such products?

These people shun science so much that they never test their hypothesis of what sounds good. Not once do they put themselves through a proper listening test, because if they did, they would sober up, and quick! Such was the case with me...

When I was at the height of my listening acuity at Microsoft and could tell that you flushed your toilet two states away :), my signal processing manager asked me if I would evaluate their latest encoder with their latest tuning. I told him it would be faster if he gave me those tuning parameters and I would optimize them with listening and give him the numbers.

I did that after a couple of weeks of testing. The numbers were floating point (had fractions), and I found it necessary to go way deep, optimizing them to half a dozen decimal places. I gave him the numbers and he expressed surprise, telling me they didn't use the fractions in the algorithm! That made me angry, as I could hear the difference even when changing a value by 0.001. I told him the difference was quite audible and I could not believe he couldn't hear it.

This was all over email, and the next thing I knew, he sent me a link to two sets of encoded music files and asked me which sounded better. I quickly detected that one was clearly better, matching my observations above. I told him in no uncertain terms that one set was better. Here is the problem: he told me the files were identical!

I could not believe it. So I listened again, and the audible difference was there, clear as day. So I performed a binary comparison, only to find that the files were identical. Sigh. I resigned my unofficial position as the encoder tuner. :)
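The check that settles this kind of question is simple: compare the files bit for bit. Here is a minimal sketch using Python's standard `hashlib`. The file paths in the usage line are hypothetical.

```python
import hashlib


def sha256_of(path, chunk_size=1 << 16):
    """Hash a file in chunks so large audio files need not fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def files_identical(path_a, path_b):
    """True only if both files contain exactly the same bytes."""
    return sha256_of(path_a) == sha256_of(path_b)


# Hypothetical file names for the two "versions" being compared:
# print(files_identical("set_a/track01.wav", "set_b/track01.wav"))
```

The standard library's `filecmp.cmp(a, b, shallow=False)` does a direct byte comparison as well. The hash version has the advantage that the digests can be posted and compared by others.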

This is why I plead with you all to test your listening experiences in proper tests. Your designer could have easily done that. He could have built two versions of that amp, matched their levels and performed blind AB tests on a number of audiophiles. Then, if the outcome was that the worse-measuring amp was superior, I would join him in defending it!
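Once such a blind test is run, the score has to be judged against guessing. A minimal sketch, assuming a forced-choice format such as ABX where each trial has a 50% chance of being right by luck: the one-sided binomial probability of getting at least k of n trials correct. The 16-trial example and the common 5% threshold are conventions, not requirements from the text.

```python
from math import comb


def abx_p_value(correct, trials):
    """One-sided chance of scoring at least `correct` of `trials` by guessing.

    Assumes each trial is a forced choice with a 50% chance of being right.
    """
    hits = sum(comb(trials, k) for k in range(correct, trials + 1))
    return hits / 2 ** trials


for score in (9, 12, 14):
    print(f"{score}/16 correct: p = {abx_p_value(score, 16):.4f}")
```

For example, 12 of 16 correct works out to about 0.038, under the usual 5% threshold, while 9 of 16 (about 0.40) is well within what coin flipping produces.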

|

Believe or not, there are folk here who have extensive experience and are not just shopping with their eyes and attracted to the highest price anything.

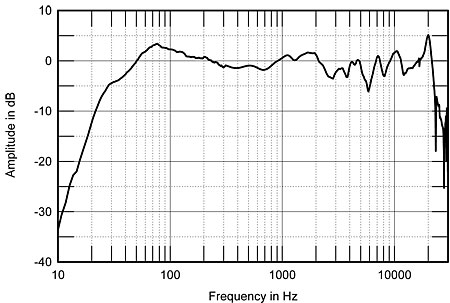

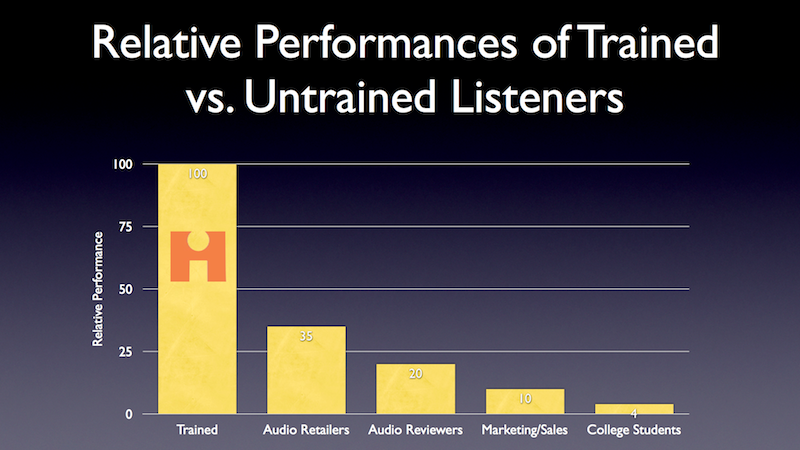

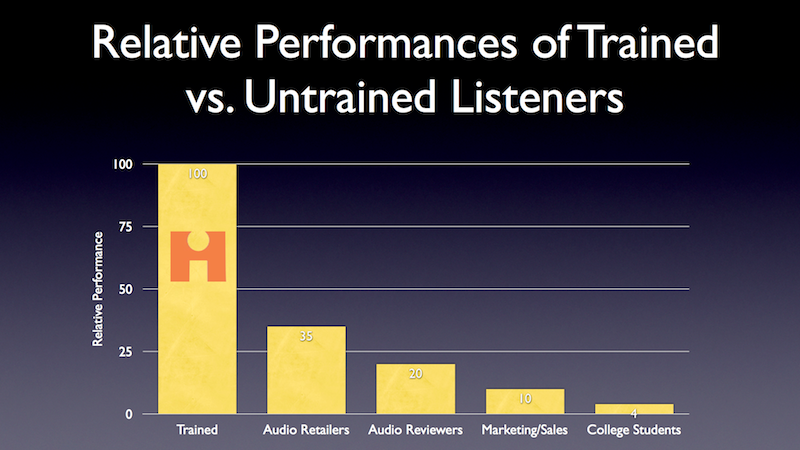

Ah, the self-serving "experience" bit. That much-abused word. This is how such experienced listeners as audio reviewers did in speaker tests:

You want to explain to me why Audio Reviewers were producing such unreliable listening test results when evaluating speakers in a blind test? That they could not repeat their own outcome when comparing speakers? Experience didn't help them. Nor did it help audio retailers and marketing/sales.

Proper "experience" was in the form of trained listeners. They followed the proper path to get trained and their skill was proven in such tests.

So please, don't use these cliches with me. The whole purpose of my post was that I know what you speak of. Experience....

|

Petty BS, JA makes clear its a nearfield response and that's exactly what is shown. Your measurements show the anechoic response with baffle diffraction loss aka step. BOTH methods show only approximate output depth extension, NEITHER can predict the actual in room LF response, which will dominate.

Sorry, no. JA's measurements assume you flush-mount the speaker in an infinite wall. No standalone speaker is used that way. As such, his measurements exaggerate the bass energy. JA states the same:

"The usual excess of upper-bass energy due to the nearfield measurement technique, which assumes that the radiators are mounted on a true infinite baffle, ie, one that extends indefinitely in both horizontal and vertical planes, is absent."

There is no way for you to predict where a speaker will be located in a room so as to provide any diffraction loss compensation. This is why the CEA/CTA-2034 standard calls for the full anechoic response in the bass, not a near-field one with the above stipulation. And that is what I, Genelec, Neumann, PSB, Revel, etc. all do.

Once you put a speaker in a room, the response will radically change in bass. For that reason, the job is not done when you get a well measuring speaker. You need to measure and correct for response errors. But you don't want to start with faulty measurements thinking a speaker designer didn't know how to design flat response and put that hump in there as seen in Stereophile measurements.

I hear you wanting the crude near-field measurement to be right so that you can then post them and say, "see, I have them." But you don't, since your speakers are not flush-mounted in infinite walls.

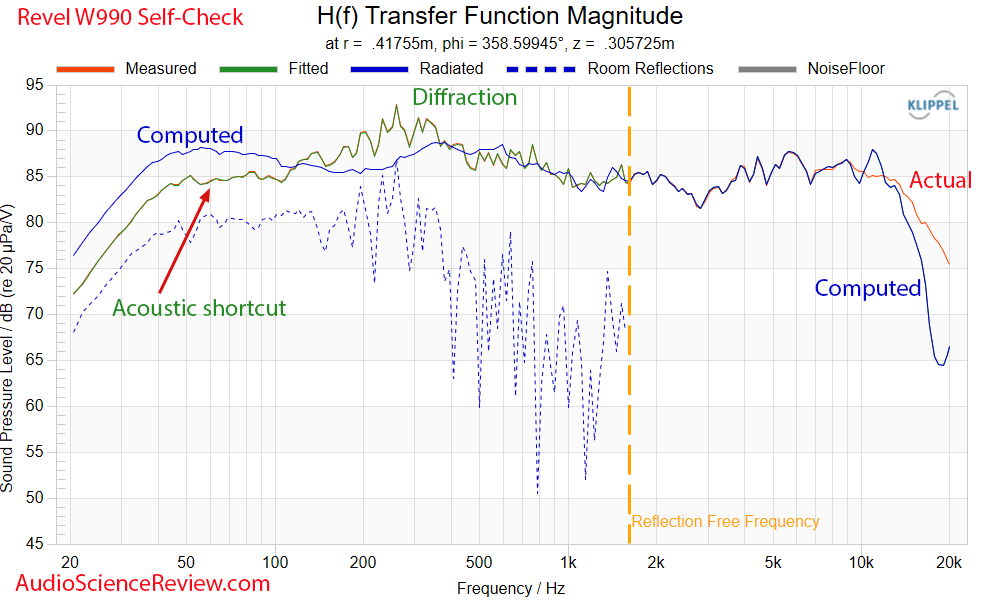

BTW, Klippel NFS has the capability to measure in-wall speakers with that assumption. It will get rid of baffle diffraction and back wall reflections. Here is an example with the speaker mounted in a small baffle:

And here is the computational analysis of the error components:

You can see how the Klippel NFS I use has properly computed the radiation from the back of the speaker ("acoustic shortcut") and subtracted it out, because in real use you would not hear it. Diffraction losses from the edges of my baffle are also found and subtracted. The system is also self-checking, allowing you to observe its accuracy.

Bottom line, Klippel NFS is a $100,000 system designed to solve these problems and give you a true picture of the radiation pattern of a speaker independent of where or how it is measured.

|

@mahgister

The point on which i disgree with Amir is not his measures set usefulness, is exclusively about extending a set of measures as synonymous with sound perceived qualities because...

And you don't care, no matter how many times I have stated it, that the above is NOT my position. :(

Measurements tell you if a system is deviating from perfection in the form of noise, distortion and non-neutral tonality. This we want to know, because those are the opposite of what high fidelity is about. We want transparency to what is delivered on the recording.

When measurements show excess noise and distortion, that is that. The system has those things, and if they rise to the point of audibility, you hear them. Best to get a system that minimizes them so you don't have to become an expert in psychoacoustics to predict audibility.

Your argument needs to be that given two perfectly measuring systems, one will sound better than the other. To which I say fine, show it in an ears-only, controlled listening test. Don't tell me what a designer thinks will happen. Just show it with a listening test.

You say the ears are the only thing that can judge musicality, but when I ask you for such testing, you don't have any, and instead you quote my words back at me or talk about what is wrong with measurements. We want evidence for the hypothesis you have, not a repeated statement of the hypothesis.

BTW, if such a controlled test did materialize, it would be trivial to create a measurement to show the difference. We will then know what it is that is observed. When you don't have anything to show from what was tested, what music was used, what listeners observed reliably, etc. there is nothing there to analyze.

|

@mahgister your points are valid to state. I’m not as savvy on audio science. I admit that. I also admit that science is really important with audio gear. Just as it is with medicine and improving peoples vision for example.

My analogy isn’t scientific but is based in fact. You cannot strip out the subjectivity of audio.

No one is trying to take out the subjectivity from audio. The entire science of speaker and headphone testing relies on it extensively. Problem with using the ear in evaluating things is that it can be difficult to do it properly. So what to do? Give up and let any and all anecdotes rule the world? No. We research and find out what measurements correlate with listening results. Once there we use the measurements because they are reliable, repeatable and not subject to bias.

If there is doubt about measurements, we always welcome listening tests. We only ask that they be proper: levels matched and the ears being the only sense used.

Just like you can’t do it with food or anything to do with taste. You can’t measure taste. You can’t quantify it but it is there.

Per above, many times we can quantify it. The entire field of psychoacoustics is about that: *measuring* human hearing perception. You just need to do it properly, as I keep saying. Food research is done that way, with blind tests. There are no controversies there. But somehow audio is special.

Audiophiles hugely underestimate the impact of confounding elements in audio evaluation. It reminds me of some research done on wine tasting. Tasters were given two identical wines but told one cost $10 and the other $90. Here is the outcome:

"For example, wine 2 was presented as the $90 wine (its actual retail price) and also as the $10 wine. When the subjects were told the wine cost $90 a bottle, they loved it; at $10 a bottle, not so much. In a follow-up experiment, the subjects again tasted all five wine samples, but without any price information; this time, they rated the cheapest wine as their most preferred."

See how strongly price comes into the equation here and how removing that aspect in a controlled test was the key to arriving at the truth of what tasted better?

They go on to say:

"Previous marketing studies have shown that it is possible to change people's reports of how good an experience is by changing their beliefs about the experience. For example, says Rangel, moviegoers will report liking a movie more when they hear beforehand how good it is. "Our study goes beyond that to show that the neural encoding of the quality of an experience is actually modulated by a variable such as price, which most people believe is correlated with experienced pleasantness," he says."

As you see, we are wired to pollute our observations with what we think in advance of such tests. It stands to reason, then, that if we want to know the truth about audio performance, all these other factors must be eliminated. Otherwise we would be judging the price, etc. and not the sound.

Of course, without going to school for a day, marketing people and engineers alike in audio have learned the above. They know that all they have to do is have a good story and a high price, and the sale is made. No need for any stinking controlled test proving anything. Just say it, folks get preconditioned, and you are done.

|

Once again, JA makes it clear its a nearfield measurement without correction, it's up to the viewer to read his speaker measurements section.

He doesn't make it clear. Most people will have no idea what I quoted means. They see a graph and run home thinking the designer screwed up.

NFS is a great tool, but certainly not mandatory for knowledgeable designers.

Go and ask Ascend. Since they purchased NFS, following my measurements of their speaker on the same system showing serious issues, their design has been hugely transformed. They can get the full 3-D radiation of a speaker in 3 hours and iterate on the design on a daily basis. In contrast, garage shop operations like yours will make a crude gated measurement or two and call it a day.

Yes, if you spend the time and energy as in the ASR thread you linked to, you can get proper measurements. But that is not what JA is doing. And certainly not what you are doing on a daily basis.

So yes, what else is new. A garage shop operation sells speakers for $15,000 but works hard to say a) you don't need to see any measurements of said speakers and b) incorrect measurements are correct.

|

When I hear what is pleasing to me, I could not care less about your “measurements” or your elitist, self indulgent, and frankly insulting “ difficult to do it (hear things) properly” bs.

Your kind of hearing test is easy. The kind that generates reliable data about the sound can be difficult at times. Other times though, it is trivial to do. But folks don't want to be bothered to know the reality of what they hear. They prefer to stay in the illusion. I get it. It is the Matrix movie all over again.

|

Amir keeps on posting that graph on trained vs. untrained listeners. What is a trained listener...someone who has passed the Harmon test, and who now believes he/she can tell what a musical sound sounds like, better than the ’unwashed’ masses.

Harman offers that training tool for anyone who cares to use it. I highly encourage you to at least try it before opining this way. Until then, let me explain what it is.

You are presented with music that has EQ applied to it, and you have to identify what that filtering is. It starts easy with very wide-band filters and progressively gets narrower, and hence more difficult. With practice, your acuity for tonal errors improves, and with it, your ability to reliably identify colorations in speakers.
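To make "wide" versus "narrow" filters concrete, here is a minimal Python sketch of a peaking EQ of the kind such training plays with. It assumes the standard peaking biquad from Robert Bristow-Johnson's Audio EQ Cookbook; Harman's software may build its filters differently, and the names `peaking_eq` and `gain_db_at` are hypothetical. The `q` parameter sets the bandwidth: a higher Q gives a narrower band, which is what makes later levels harder.

```python
import cmath
import math

def peaking_eq(f0, gain_db, q, fs=48000.0):
    """Normalized (b, a) biquad coefficients for a peaking EQ (RBJ cookbook form).

    Higher q means a narrower band around f0.
    """
    amp = 10 ** (gain_db / 40)            # peak linear gain is amp**2
    w0 = 2 * math.pi * f0 / fs
    alpha = math.sin(w0) / (2 * q)
    b = [1 + alpha * amp, -2 * math.cos(w0), 1 - alpha * amp]
    a = [1 + alpha / amp, -2 * math.cos(w0), 1 - alpha / amp]
    # Normalize so that a[0] == 1
    return [x / a[0] for x in b], [x / a[0] for x in a]

def gain_db_at(b, a, f, fs=48000.0):
    """Magnitude response of the biquad at frequency f, in dB."""
    zinv = cmath.exp(-1j * 2 * math.pi * f / fs)    # z^-1 on the unit circle
    num = b[0] + b[1] * zinv + b[2] * zinv * zinv
    den = a[0] + a[1] * zinv + a[2] * zinv * zinv
    return 20 * math.log10(abs(num / den))

# Same +6 dB boost at 1 kHz: wide (Q = 0.7) versus narrow (Q = 8)
for q in (0.7, 8.0):
    b, a = peaking_eq(1000, 6.0, q)
    print(q, round(gain_db_at(b, a, 1000), 2), round(gain_db_at(b, a, 1500), 2))
```

Both filters boost 1 kHz by the same 6 dB, but half an octave away the wide one is still audibly boosting while the narrow one has almost no effect, so the narrow coloration affects far less of the music.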

Importantly, the test has nothing to do with Harman or any speaker or technology. It is a pure test of whether you can tell what coloration a speaker imparts, which is precisely what we want to determine in such tests.

FYI, after just a limited amount of training using the above software, I attended a gathering of top acousticians at Harman. Dr. Olive took us to their listening room and played the training test. Everyone got to level 2 or 3, but from there they went silent as they could no longer detect the differences. I went to levels 5 and 6, to the shock of everyone there. Before you think I am gloating, Dr. Olive sailed way past me with incredible ease! Here is a picture of him at the meeting doing this:

You can faintly see the image of the training software on the screen.

Some things need proper training. You can't be self-taught in everything, especially when you have not passed any test to determine what you really know. The reviewers got tested, and they didn't know what they were doing.

|

@nevada_matt

what is “my kind”?

The only thing I think I know is your name: matt. Tell me more about how you evaluate the sound of audio systems and I can answer more.

|

Great, then you shouldn't avoid at all costs a controlled listening test vs your speakers at PAF 2024, correct?

No, it is not "great." I am not the one that has to work to demonstrate the value of what you are selling. That is your job.

To wit, you haven't even posted a measurement of the speakers you sell. Some cost as much as $15,000, yet all we have is a picture of them. Post some measurements for starters. Then write an article on any formal listening tests you have performed. Once we have these, maybe we will care to see what you have to offer. Until then, what you are or are not selling is not remotely important to me.

|

Two conflicting statements in one single paragraph: keep the subjectivity in audio, but then intervene so that very subjectivity (i.e., anecdotes) does not “rule the world”. Hmm….

Subjectivity can 100% rule the audio world! Have every manufacturer with claims of audio superiority provide controlled listening tests where only the ear is involved, and I will retire from what I am doing and get more gardening done.

What you want is different: you want the self-serving views of a designer to be the arbiter of what is right and good instead of relying on the ears of a few of your fellow audiophiles in a controlled test. I just can't join you in that, nor can a huge swath of the audiophile community.

We want unbiased results and data. Why is this so hard to understand?

|

It’s not so much about what you do in your site, which has clearly value, but it’s your (and your followers’) campaign in all audio forums to shut down every subjective discussion (such as a hobbyist simply sharing their listening impressions with each other, on anything audio related).

Those shut-down requests have been made repeatedly regarding my posts here. I don't see you reacting negatively to those.

But yes, there are some people who are extremists in both camps. As I have explained and shown, @soundfield is one of them. They give our cause a bad name, and I am sure the same is true of some in your camp.

We need to get beyond that and judge the here and now. I am fielding a ton of hostility from a number of posters in this thread and others like it, which I can take, as you can well see. But in this context, you can’t complain about why some objectivists do this and that. Both camps need to stick to what they can demonstrate as proof and value added, as opposed to angry responses.

|

That won’t matter to your ears in a controlled listening test. Your speakers measure well, rank highly in controlled listening and cost more. It seems you would have zero to fear.

That’s right. My Revel Salon 2s have excellent measurements and perform just as well in controlled listening tests. This sharply increases the odds that others will like them too. At his talk at RMAF, John Atkinson was asked what his favorite speaker was after testing and listening to 750 of them. His answer? Revel Salon 2:

https://youtu.be/j77VKw9Kx6U

He says: "I wept before I had to send them back."

Of course, they have to perform given how expensive they are.

Against this landscape, you want to just jump into the ring and compete. I suggest that while you are waiting a year for the PAF audio show, you:

1. Send your speaker with a $2,000 check to the Workwyn folks to have it properly measured. Listen to their feedback and correct the errors they find. For a bit more money, they can even test your drivers using a laser interferometer and such.

2. Build a turntable or shuffler to handle large and heavy speakers. My speakers weigh 120 pounds each. It is nontrivial to swap them against other speakers, which I assume are just as heavy. We have a member who had an engineer friend build him one for bookshelf speakers. You can contact him and chat about the challenges he faced.

3. Perform such blind tests yourself. Don’t just use yourself as a listener. Invite a few local audiophiles and put them through the test. Put in a control (a really bad speaker) to weed out listeners who clearly can’t tell the good from the bad.

Once you do these things, which any speaker designer must do, then I say you are ready to put people through a public blind test. For that, you don’t need me. Just have visitors go through it and collect the data across the population. Again, put the control in there to make sure people know what they are doing.
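The control-speaker idea can be sketched in a few lines of Python. This is a hypothetical illustration, not a published Harman protocol: it assumes each listener rates every speaker on a numeric scale (higher is better), keeps only the listeners who rated the known-bad control below every other speaker, and then averages the remaining scores across the population. The function names and the rating format are assumptions.

```python
from statistics import mean

def screen_listeners(ratings, control):
    """Keep only listeners who scored the control speaker below every other speaker.

    `ratings` maps listener -> {speaker: score}; a higher score means more preferred.
    """
    kept = {}
    for listener, scores in ratings.items():
        others = [s for spk, s in scores.items() if spk != control]
        if others and scores[control] < min(others):
            kept[listener] = scores
    return kept

def mean_scores(ratings, exclude=None):
    """Average score per speaker across listeners (assumes everyone rated every speaker)."""
    speakers = sorted({spk for scores in ratings.values() for spk in scores if spk != exclude})
    return {spk: mean(scores[spk] for scores in ratings.values()) for spk in speakers}
```

Listeners who fail the control check are dropped rather than averaged in, so someone who cannot tell a really bad speaker from the others cannot drag the population result toward noise.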

But really, the show is not the educational part. All the other stuff before it is what you need to do. An Olympic swimmer doesn’t become a champion by just waiting for the Olympics to come around. You need to put in the work before.

|

@raysmtb1 he is not doing this for free. Laughable. He has a patreon. Every single review asks for donations. He doesn’t disclose how much the website makes but it’s surely not nothing.

It is for "nothing." Actually, less than nothing. There is no business plan that would support what I am doing. I just bought a $22,000 dummy load to emulate reactive loads for speaker testing. Do you know how many donations it would take just to get that money back? You didn't see me going out and getting sponsorships from companies to pay for it, as others do. Members expressed interest in more tests, so I agreed and wrote the check, in a declining economy no less, where our investments are worth much less than a year ago.

When someone sends me gear to test, I almost always pay to ship it back. By your logic, I should keep coughing that up out of my own pocket so I can't be accused of doing something for money.

And let's say I didn't ask for donations. Which one of the arguments in this thread would go away? The answer is none. Complainers will complain.

The fact is that your fellow audiophiles are the ones who suggested that I accept donations. I thought about it and agreed it would help expand the work, allowing me to test things that I would not otherwise test.

If you think this is a money-making venture, why don't you make an offer to buy it from me? Remember, you would have to buy all the gear, learn to use it and produce near-daily reviews. And come here to defend your work and personal reputation. I would love to hand this off to someone else and go enjoy my other hobbies.

|

I have 2 that handle 330lbs and have done such testing for a while, both mono and stereo. You can't know any of this ensconced inside your little kingdom.

You only need to concern yourself about one thing, taking a controlled listening test in public.

PAF 2024

So no measurements are in our future? Nor any documented case of above shuffler being used? Your only interest is to duke it out with me at a show? Is that right?

|


Hopelessly biasing you like your reviews.

Since a garage show operation can't have better measurements (from your limited understanding), have no fear.

Now measurements of speakers are not useful because they bias people? Which way? They make people think they are bad?

|

@mahgister

ANY DESIGNER USES SINE WAVE PULSES ...Van Maanen too... But he also uses real music bursts ... Is it too difficult to understand why?

There is nothing there to understand. You have no information on what music was used. How the testing was done. Who were the listeners. What was compared to what. You are asking me to believe in something that even you don't know about.

|

Duke it out?? You're going to do a public listening test with your favorite speakers.

Both you and the YouTube audience should have lots of fun. It's listening to music, Amir, not an octagon cage match. Have no fear.

What is your fear of posting measurements and results of controlled tests? Do they not exist? Are they not flattering?

|

@mahgister

Why did Amir get it wrong?

"Why?" You haven’t covered the "what." You said people shouldn’t use measurements to assess the fidelity of amplifiers. I showed you that your own expert witness on two occasions used tones and measurements, and that the disconnected sine waves in his paper bear zero resemblance to any music. How come he can do it but you complain about me?

Answer is that sine waves are a subset of music. If an amplifier is high fidelity, it better ace the simple signals. If it manages to screw that up, why do you hang your hat on music?

Really, it is the holy-grail audiophile claim that "something that measures bad sounds good," as if to shout "science doesn’t matter." Yet for the millions of times this has been stated, not one person has provided a proper listening test to prove it.

I actually think it is possible to show pathological cases where the above is true, but folks are not even trying. So confident are they that people will just believe the salesman/engineer, handing them a ticket to produce less performant amplifiers while charging so much more for them! It is such inverted logic, and remarkable that it works with people.

Fortunately this is changing. We are making that change. We are taking some control of our destiny and driving toward proper, transparent audio gear that can be shown to be so.

|

Measurements are all I use, so they are very useful, but for you prior to review, a bias.

I am not reviewing your speakers. I want to see the measurements so I can put some meat behind the bold claims you are making regarding their performance. Your unwillingness again shows that either you don't have proper measurements or they show too poor a performance.

You are also contradicting yourself. You have no issue biasing people with the way the speaker looks or how it is designed. But ask for some objective data and, oh, "that will bias you." Well, hell, you already biased everyone.

|

We know Amir, we know. But you'll have to trust your ears for once.

But what about those measurements? I would imagine that if they were complimentary, you, as the company salesman, would want people to see them. Ergo, they must not paint a good picture, either of your skill in measuring or of the flaws they expose.

|

Exactly. I believed something "told" once. Thought I’d put it to the test myself. It was about a really great measuring DAC on said forum. All looked good on measurements and graphs. I took the plunge, went out and bought it with intent it would be a long term keeper DAC. Played it for just under two weeks. Unfortunately, ended up sending it back for a full refund and kept a different unit that measured worse.

Sad, because if you had spent a modicum of effort performing a blind test, you would have arrived at a very different conclusion. But no, you wanted to involve your eyes and ignore the elasticity of your brain and its poor memory recall.

This reminds me of someone who sent me a $2,500 Schiit Yggdrasil DAC. He told me it sounded much better than a well-measuring Topping DAC. I asked him how he tested. He gave me his tracks and said he used Stax headphones, which I also had.

I went to set up an AB test, and it was clear that levels were not matched between the two. I matched them and then performed a blind test. At normal volume, there was no difference whatsoever between the Topping and the Schiit Yggdrasil. Again, I replicated his entire setup, and this was the conclusion.
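Level matching is the step that undid the original comparison. Here is a minimal Python sketch of the arithmetic, assuming you already have time-aligned sample captures from each device: compare their RMS levels in dB and compute the gain that brings one to the other. The 0.1 dB tolerance in the last line is an assumed, commonly cited target rather than a figure from this thread, and the function names are hypothetical.

```python
import math

def rms(samples):
    """Root-mean-square level of a block of samples."""
    return math.sqrt(sum(x * x for x in samples) / len(samples))

def level_difference_db(a, b):
    """How much louder capture `a` is than capture `b`, in dB."""
    return 20 * math.log10(rms(a) / rms(b))

def match_gain(reference, other):
    """Linear gain that brings `other` to the RMS level of `reference`."""
    return rms(reference) / rms(other)

fs = 48000
tone = [math.sin(2 * math.pi * 1000 * n / fs) for n in range(fs)]
quieter = [0.9 * x for x in tone]          # a device playing slightly lower

print(round(level_difference_db(tone, quieter), 2))   # 0.92
g = match_gain(tone, quieter)
matched = [g * x for x in quieter]
assert abs(level_difference_db(tone, matched)) < 0.1  # assumed matching tolerance
```

A gap of under 1 dB is easy to miss as "louder" and easy to hear as "clearer," which is why it has to be removed before any preference is recorded.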

The problem continues to be that people believe in casual testing of gear in which many factors beyond the fidelity of the two products are involved. And then they complain when their observations don't match science and engineering. Well, you can't mix non-science and science.

So tell your stories but not to me please. Come back when you can at least be bothered to do an AB test without your eyes involved.

|

Thanks to those in business who understand this and offer a helpful refund policy. Not everyone is going to like the sound of their designs.

The "sound" was the first thing sacrificed in your testing. I was once talking to a rep for a product line. He told me he was the #1 salesman of CD players at the high-end store near us. I asked him how he did it. He said he would first play music on the cheap player and get the customer to like it. Then he would take him over to the $4,000 player. He said all he had to do was push the eject button and let the customer watch the drawer come out with fluid motion, and the sale was made!

So no, it is not about sound. Folks are easy prey when they use their eyes to eat....

|

My ears, my preference, is #1. Summary, what measured better did not sound better.

Incredible that people with eyes wide open continue to claim that they only used their ears. No matter how much you show them that such testing produces completely wrong results, they can't even be bothered to state what they really did. "My ears." No, it was not your ears. It was your ears, your eyes, and a brain that loves to please you by telling you that higher-priced items must sound better.

I know you disagree. To do so, you need to come back with an ears only test. Why is it so difficult to accept when all you talk about is "trust your ears?" How come you must see what you are testing? How come you don't realize that if levels are different, preference shifts just because of that?

|

You’ll be listening to them, same thing. You didn’t see measurements at Harman of the various speakers prior to listening on the shuffler. Trust your ears Amir, have no fear. Your speakers measure just fine, don’t worry.

I am in no position to listen to your speakers. I like to see the measurements. Why are they not forthcoming? You understand that measurements provide incredible value beyond anything a listening test shows, yes?

|


Yes we can. And, there it is for all to see. My goal was to further expose more of your self-inflicted BS to everyone here. Wishing you best of luck on the future!

The only thing you exposed is that you neither know nor appreciate the most basic fundamentals of how to assess sound fidelity.

Give me that poor-measuring DAC and I will put it in an old, beat-up box that looks like $20 audio gear from the 1970s, and I guarantee that everyone like you will hate its "sound," and heap praise on the well-measuring gear you said didn't "sound" as good.

You can't be this proud of ignoring how your brain works. Are you?

|

Listening test says hey...these speakers sound what we feel is as close to the real as we have ever heard; measurements say they sound like mud with a dollop of distortion on top...who here is a buyer?? ....;0)

The problem is, you are short of any bias-controlled listening tests that show this. You keep saying such tests exist but can't even remotely demonstrate it. As I said, it should be possible to at least create a contrived one, but you don't even have that.

For our part, we have a large library of tests that show the opposite of what you claim: that good measurements often do predict better sound. Take this Audio Engineering Society paper:

Some New Evidence That Teenagers May Prefer Accurate Sound Reproduction, by Sean E. Olive, AES Fellow

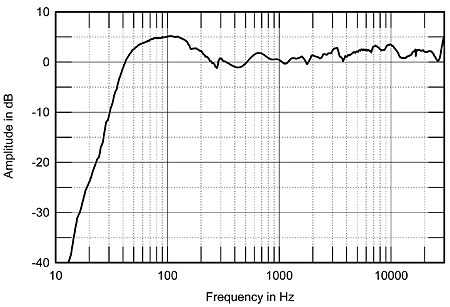

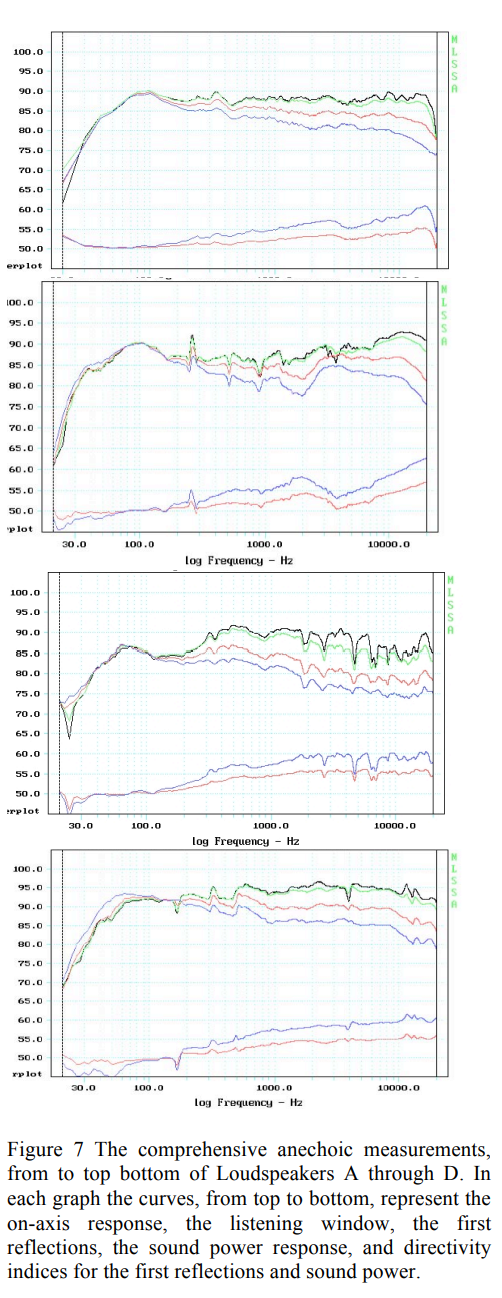

Check out this set of measurements, ordered from top (A) to bottom (D):

See how clean and tidy the top speaker is and how "muddy" the measurements are in the rest? Keep that in mind as you read the results of the listening tests:

There are some clear visual correlations between the subjective preference ratings of the loudspeakers, and shape and smoothness of their measured curves. The most preferred loudspeaker (Loudspeaker A) has the flattest and smoothest on-axis and listening window curves, which is well maintained in its off-axis curves. In contrast to this, the less preferred Loudspeakers B, C and D all show various degrees of misbehavior in their magnitude response both on and off-axis. Loudspeaker B has a “boom-and-tizz” character from the overemphasis in the low and high frequency ranges, combined with an uneven midrange response. Loudspeaker C has a similar mismatch in level between the bass and midrange/treble, in addition to a series of resonances above 300 Hz that appear in all of the spatially averaged curves. Loudspeaker D has a relatively smooth response across all of its curves but there is a large mismatch in level at 400 Hz between the bass and the midrange/treble regions. Together these irregularities in the on and off-axis curves are indicative of sound quality problems that were reflected in the lower preference ratings given to Loudspeakers B, C and D.

We are so lucky that what our ears judge to be good sound also passes the test of logic: that we want neutral-sounding speakers, not something that screws up the tonality of our content. That is, again, if we just listen and don't look.

The above is also the reason I keep asking @soundfield for measurements. This should always be the first question you ask of any speaker company. If they don't have measurements or are afraid of sharing them, run, and run fast.

|

instead of believing what I posted about Halcro’s, I suggest Amir buy a set of their amps, he will marvel at their spec’s, and he will probably enjoy their sound. Plus, he can get a very good price on them…I wonder why? LOL.

The feedforward technology in that Halcro amp (and before it, from Kenwood and others) has completely transformed the headphone amplifier market. THX reintroduced it by eliminating the inductor in the design, and with it made a giant leap in distortion and noise. Drop.com shipped an amplifier using it and changed the industry forever. The amplifier was raved about by both objectivists and subjectivists.

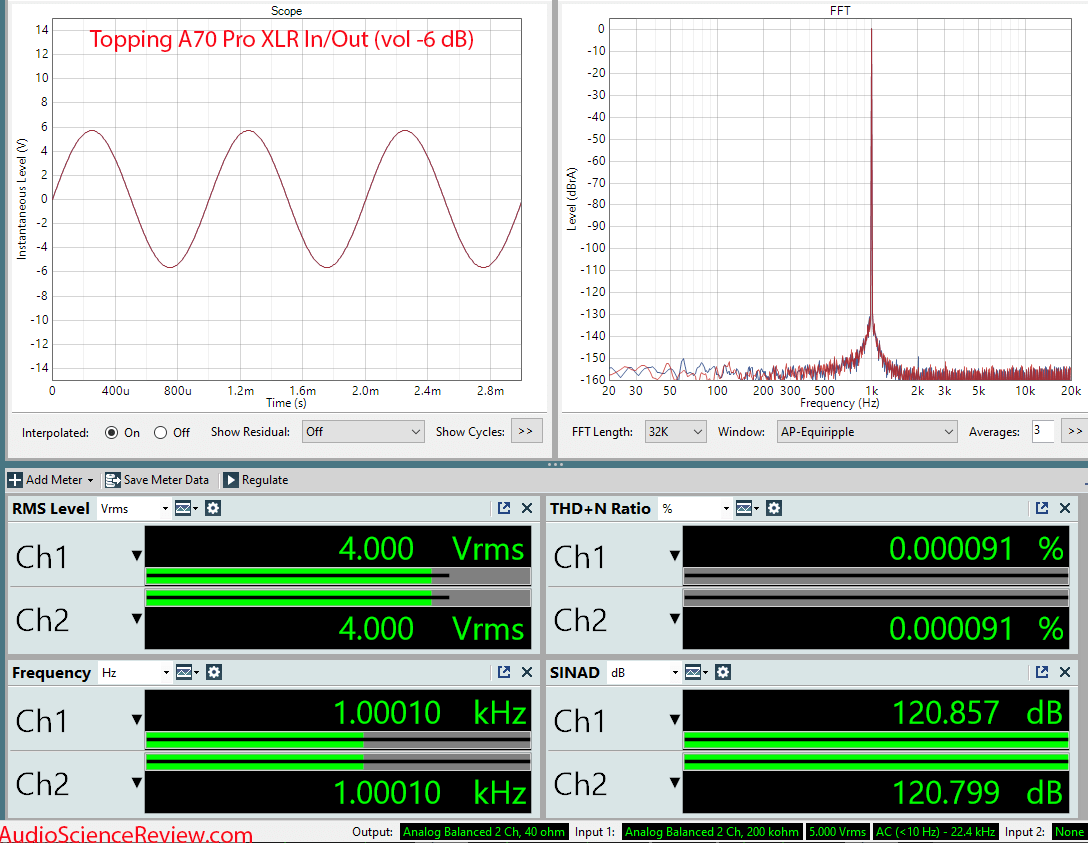

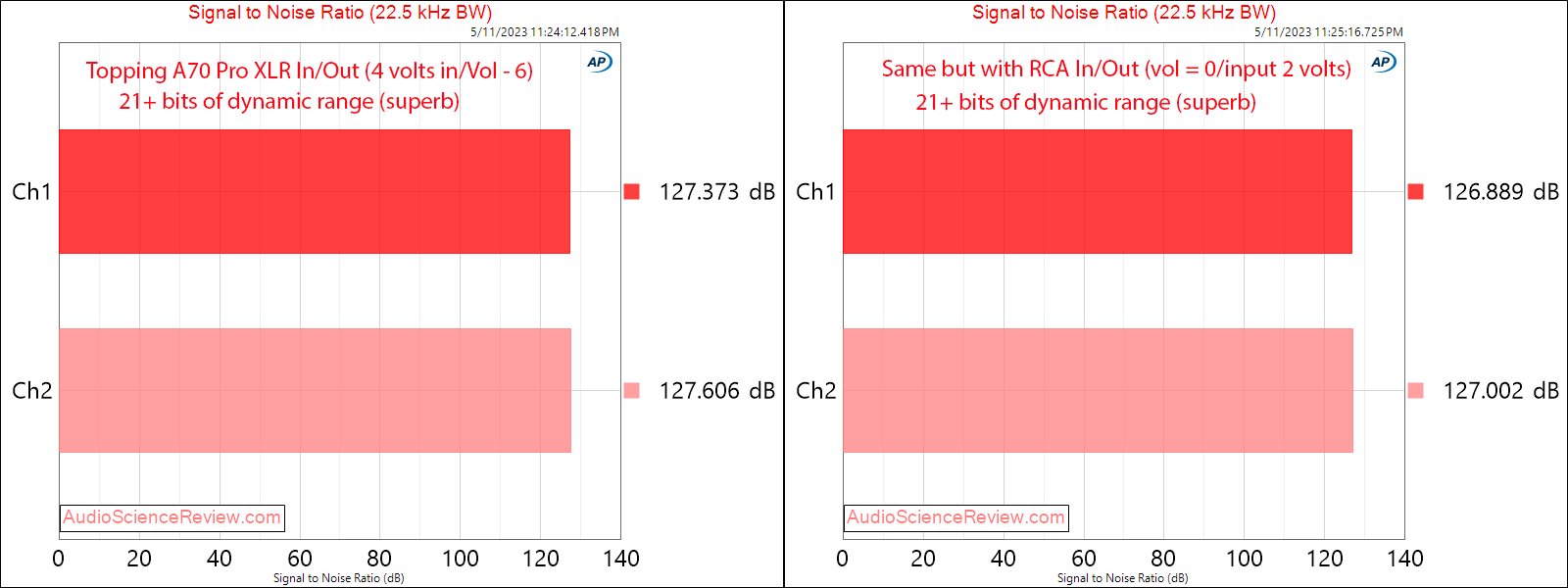

The THX design then created an arms race among a number of companies to better its performance even further. They used composite op-amp technology (an op-amp in the feedback loop), which avoided THX patents while producing even lower levels of noise and distortion. Topping was the first company to do this. Check out their latest incarnation, the A70 Pro:

Check out the stunning performance as far as distortion and noise:

Distortion is at a whopping -150 dB. For reference, the best-case hearing threshold is -115 dB. We now have 35 dB of headroom!
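The headroom figure is plain dB arithmetic. This short Python sketch uses the two numbers quoted above and assumes the usual 20·log10 amplitude convention; `db_to_amplitude_ratio` is a hypothetical helper name. It shows that 35 dB means the distortion sits roughly 56 times (in amplitude) below the best-case audibility threshold, and that -150 dB corresponds to about 0.000003% distortion.

```python
def db_to_amplitude_ratio(db):
    """Convert a level in dB to a linear amplitude ratio (20*log10 convention)."""
    return 10 ** (db / 20)

distortion_db = -150.0   # amplifier distortion figure quoted above
threshold_db = -115.0    # best-case hearing threshold quoted above

headroom_db = threshold_db - distortion_db
print(headroom_db)                                   # 35.0
print(round(db_to_amplitude_ratio(headroom_db), 1))  # 56.2
print(db_to_amplitude_ratio(distortion_db) * 100)    # distortion as a percentage
```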

The noise performance is better than the best DACs even though this amplifier produces more power:

At $499, this headphone amplifier costs less than the shipping cost of much high-end gear!

It is this kind of transformation which is fueling interest in what we do at ASR. The measurements have created a closed loop process with clear goals of what needs to be done to create state of the art audio products.

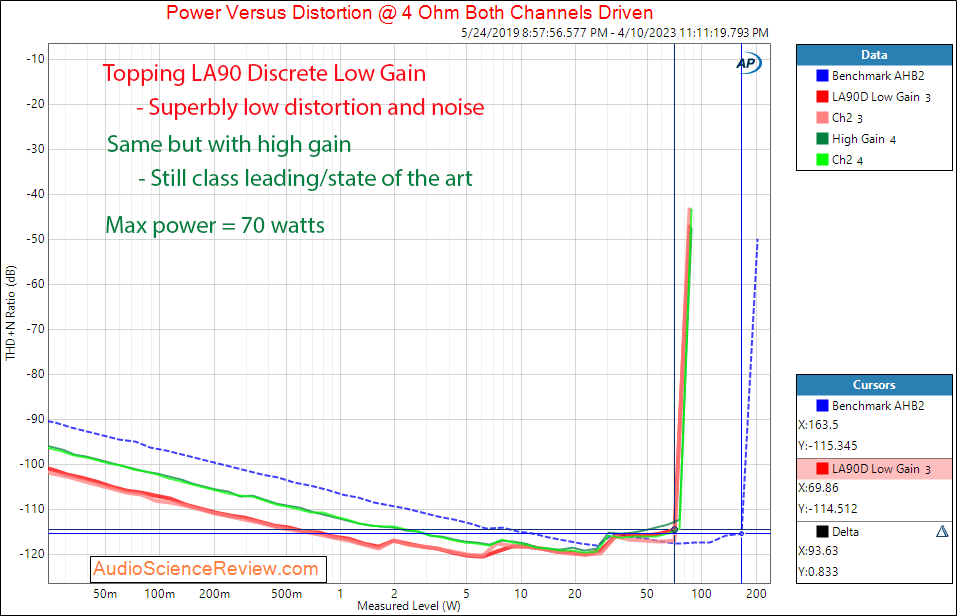

The same technology is now used in low- to mid-power amplifiers with similarly stellar results. Again, here is the Topping LA 90 Discrete:

It now beats the Benchmark AHB2, which was also based on the same feedforward technology as Halcro/THX:

Will be interesting to see if they scale it up in power some more.

Net, net, there is an incredible world of technology that you are not aware of. It is advancing in real time and provides incredible pleasure to those of us who are true music lovers and want full transparency to the source.

"LOL" indeed but not in the way you meant it.

|

Years back, when Halcro first came to market, one of their claims to fame was that they had amps that were exhibiting such amazing measurements that they were ground breaking. No other manufacturer could deliver a product with the type of measurements that these amps delivered. Problem was that as soon as any reviewer with half a decent ear listened to them, they were pretty much dismissed as not good sounding at all! (Even though JA and others did measure them and were astounded by what they found, which conformed to Halcro's marketing.)

I don't know that history, but the Internet does. Here is JA's review of the Halcro dm38:

Sound

"To this day, I have yet to hear any amplifier that equals the dm58's combination of complete neutrality, harmonic generosity, lightning reflexes, and a sense of boundless power that is difficult to describe," was how Paul Bolin summed up his experience with Halcro's dm58 monoblock. It also nicely describes my reaction to the dm58 when the review pair briefly spent some time in my listening room.

Ahem. It is not going your way, is it? :) Continuing:

The dm38 didn't pale in comparison with my 18-month-old memories of the dm58s. "Awesome dynamics," I noted, after playing Prince's Musicology (CD, NPG 74645 84692 7) two times through after hearing Prince live at Madison Square Garden; "awesome!"

[...]

Perhaps more important, as well as excellent macrodynamics—the differences between loud and soft and how consistent the amplifier's presentation was at the dynamic extremes—the dm38 also excelled at reproducing microdynamics. By this mean I mean how well it preserved the tonal and imaging differences among different sonic objects at different levels.

[...]

The Halcro allowed me easily to identify how each instrument was contributing to the combined tone, regardless of the speakers I was using. At the risk of venturing into the semantic void, it wasn't just that the dm38 reproduced the sounds of instruments or voices with superb fidelity; it also excelled at reproducing the space between those instruments. Remember that the stereo image is an illusion, its fragility due to the brain's having to put aside what the ears actually hear in favor of reconstructing a simulated space between and behind the speakers.

Still not backing your claim. But maybe it is this bit of subjectivity that you are hanging your hat on:

So, the dm38 combined great dynamics and great bass control with a superbly transparent view into the recorded soundstage. Its treble was free from grain and its midrange was as smooth as silk. However, I couldn't escape the feeling that the amplifier's tonal balance was on the lean, cool side.

He ends with:

Summing up

It may be expensive, but Halcro's dm38 effortlessly joins the ranks of top-rated power amplifiers, not only for its sound quality but also its measured performance (not a given; witness some recent reviews).

[...]

Like the dm58 monoblock, the dm38 is balanced toward the cool side of the spectrum—though I am sure Bruce Candy will argue that the Halcro amplifiers are actually neutral compared with the competition—so it will work best with speakers and source components that don't themselves sound lean. But with optimal system matching, the Halcro's effortless dynamics and astonishingly clean presentation will satisfy the listener's soul.

So this matches your definition of "they were pretty much dismissed as not good sounding at all!"??? Really? He couldn't gush any more than he did. Was he wrong about all of the above? That it can satisfy your soul?

How about this other reviewer:

Conclusion

The Halcro dm38 is among the best amplifiers in the world at any price. Its sound quality easily competes with the amplifiers from Krell, Mark Levinson, Pass Labs, Bel Canto, Spectral, Ayre, Boulder or any of the other players in the ultra-high-end market. At this level of performance, the sonic characteristics of the amplifiers become harder and harder to describe as they become closer to the proverbial "closer to the music" phenomenon. However, if forced to describe the Halcro's sound quality I find the Halcro sound to be similar to that of the Krell FPB series except a bit quicker and with less weight in the bass. For those in the market for a reference grade amplifier with the ability to resolve the slightest nuances, I strongly recommend a close look at the Halcro dm series.

This is your definition of bad review?

No, companies go out of business because in high-end audio, it is 80% marketing and 20% engineering. Fail at the former and you go out of business.

|

There you go again, making assumptions that everything is black or white and there is no such thing as "gray" in between. This is common engineer-only type of behavior and reaction. It's to be expected.

Putting aside that my last job was as a corporate VP in charge of a full division, including marketing, business, PR and of course engineering, my job is to remove those shades of gray. I strive to find audio products that shrink impairments below the threshold of hearing, as you see above. This is what gives clarity and confidence to buyers. This is why so many people are gravitating to this science- and engineering-based method of evaluating audio technology.

The high-end audio industry wants that gray fog out there. They want you to not know left from right. They want you to think that any and all things can change the sound. They want you all to think you are the greatest listeners there are, and that what one hears has little to do with what another hears. That way, all of you can be simultaneously right. There is a market for everything then.

You cut through the fog by combining multiple disciplines. We use audio research in advanced areas such as the perception of sound in a room. We use electronic engineering design to tell the real from the imaginary in design. We use measurements to tease out the performance of a product, or lack thereof. And we use controlled listening tests when needed to arrive at unbiased outcomes.

Yes, some gray is still left in there. Speakers and rooms are that way, and headphones even more so. But outside of that, if you think the world is gray, that is just wrong. We cut through that day in and day out and are happier for it.

|

@amir_asr

I see that your most recent reply to me has been deleted, amir, and I can only assume you have done so, since no one else would have had anything to gain doing so.

Your assumption is incorrect. The post was reported and deleted:

"Hi amir_asr

Your Post was removed by a moderator

2023-07-08 18:18:45 UTC

@kevn Thanks for taking time to reply : ) - may I assume that my having you at a disadvantage is your acknowledgement you had not directly respo..."

Briefly, I congratulated you for getting 6 out of 6 right. I advised you in the future to capture the output of the program so there is no doubt. I also recommended that you run the test twice in a row: 6 trials is not much, and it is possible to get that score by guessing. Again, good job, but next time be clear when you are asking me a question. Don’t hide your real intentions.
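Why 6 of 6 is not conclusive, and why running it twice helps, comes down to one line of probability. This sketch assumes each trial is a two-way choice that a pure guesser gets right half the time; the thread does not say exactly how the program scored trials, and `chance_of_perfect_score` is a hypothetical name.

```python
def chance_of_perfect_score(trials, p_guess=0.5):
    """Probability of getting every trial right by pure guessing."""
    return p_guess ** trials

one_run = chance_of_perfect_score(6)     # 6 of 6
two_runs = chance_of_perfect_score(12)   # 6 of 6, twice in a row
print(one_run, two_runs)                 # 0.015625 0.000244140625
```

That is roughly 1 in 64 for a single perfect run versus 1 in 4,096 for two in a row, so the second run makes a lucky streak far less plausible.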

|

BUT, i do not believe that measurements are the B all, and end all, of the decision making process;

This line keeps getting repeated even though I have answered it multiple times. For a large class of products, measurements are absolutely the "B all." Take fancy wires. Measurements, including music null tests, together with knowledge of engineering conclusively prove that they bring no advantage over generic ones. DACs and amplifiers that beat the threshold of hearing fall in the same category.
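A null test subtracts one capture from another and measures what is left over. Here is a minimal Python sketch of the scoring step, assuming the two captures are already sample-aligned and level-matched (real tools must do that alignment first, which this omits). A sine stands in for music, and the 3 kHz "difference" is a synthetic, hypothetical example; `null_depth_db` is a hypothetical name.

```python
import math

def null_depth_db(a, b):
    """Residual power of (a - b) relative to a, in dB; more negative = deeper null."""
    residual_power = sum((x - y) ** 2 for x, y in zip(a, b))
    signal_power = sum(x * x for x in a)
    if residual_power == 0:
        return float("-inf")   # bit-identical captures
    return 10 * math.log10(residual_power / signal_power)

fs = 48000
music = [math.sin(2 * math.pi * 1000 * n / fs) for n in range(fs)]   # stand-in for a music capture
# Hypothetical second capture that differs only by a tiny 3 kHz component
other = [x + 1e-5 * math.sin(2 * math.pi * 3000 * n / fs) for n, x in enumerate(music)]
print(round(null_depth_db(music, other), 1))   # -100.0
```

If swapping a cable leaves a residual far below audibility, nothing the cable did can be heard, regardless of what is claimed for it.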

An incredible number of audiophiles have put the above to the test by trusting the measurements, and are better for it. They walk away with a full understanding of what these products do and how they are engineered.

they are a data point (pun) and to me..that is all. Listening to a piece of gear in my room and my system is the deciding factor.

Whatever you do is obviously your business. The issue is that you are advocating for it by posting here. I am here to tell you that most of the tests audiophiles run are incorrect and generate noise instead of data. It is trivial to get you to like something at home without it having merit.

This reminds me of the strategy behind dog treats: "they have to look appetizing to the owner, not the pet!" You think a dog cares about the treat looking like a dog bone? No, it is the owner who is made to think it must be a tasty treat for the dog, the real facts be damned.

We have simple protocols and rules for how to get proper results with home listening, but you don't want to go there. Why? Because it invalidates your public point of view and past purchases. Well, that can't be helped. The truth is the truth.

So please don't repeat these lines to me. I have addressed them countless times.

|

but if it measures well and sounds marginal, it is no longer in consideration. Do I need to listen blind and with perfect AB comparisons etc., heck no, i trust my ears- and more importantly, what I am attempting to achieve with the sound that i get in my listening environment...and that is all. YMMV.

We have no disagreement on "sound in a listening room" mattering. The problem is that you keep talking about "ears" where in reality you have no idea what went into them. Measurements tell you that but you refuse to acknowledge that.

What you don't acknowledge is that you are not following a proper protocol, so nothing forces your brain to judge only the sound in your room. You are refusing to believe that you are human, and how a human reacts to and perceives stimuli.

Let's say I give you two pieces of equipment that produce identical sound. You vote them as sounding different to you. You made a mistake, right? But how would you know? Without a protocol, you have no idea. You make your judgement and then go online and rave about some product when you could be dead wrong.

Compare that to doing the test blind. Get a friend to switch between A and B 10 times and see if you can tell the two items apart at least 9 times. One of two things happens: you get it right, or you fail. See the power of using a proper protocol? How it guards against you fooling yourself?

Run the above a few times and you will sober up real good. You will no longer go around and say "I trust my ears" because you will have evidence that what you thought was input from your ears, clearly was not.
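The 9-out-of-10 bar in the A/B exercise is not arbitrary. This sketch assumes each switch is an A-versus-B call that a pure guesser gets right half the time, and uses the conventional 5% significance level (an assumption, not something stated in the thread). It computes how likely a score is by luck and the smallest passing score for a given number of trials; the function names are hypothetical.

```python
from math import comb

def p_at_least(k, n, p=0.5):
    """Probability of k or more correct answers out of n trials by guessing."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))

def min_correct(n, alpha=0.05):
    """Smallest score out of n that a pure guesser reaches with probability <= alpha."""
    for k in range(n + 1):
        if p_at_least(k, n) <= alpha:
            return k
    return None   # too few trials for any score to be significant

print(p_at_least(9, 10))   # chance of 9 or 10 right by luck, about 1.1%
print(min_correct(10))     # 9
```

With 10 trials, 8 correct still happens by luck more than 5% of the time, so 9 is the lowest score that clears the bar.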

|

This is exactly the point that Amir seems to be missing. Music is an art form. The reproduction of music is a combination of art and science.

Interesting thought. Should we start rating designers as to how much they know about art? And if they don't, dismiss their work out of hand?

What does an amplifier know about art anyway, and how did that knowledge get into it? Can it tell whether I am playing nails scratching on a chalkboard or Mozart? If so, how does it do that?

|

@texbychoice

Alas, the story did not end well. After spending $10K on the processor, I spent another $5K to upgrade it.

The snake in snakeoil bit you in the butt. Wonder what kind of reception the above statements would receive over at ASR?

Your confusion about what snake oil is and what we do at ASR is your problem. You seem to think anything expensive must be snake oil. Do you go around saying that about a BMW?

Our mission at ASR Forum is to see whether a product is well engineered or not. If it is, then you as a consumer get to decide whether you want to buy it. Many times these products are low cost, but often they are very expensive. That cost cannot and must not be held against them, because it does cost money to produce products that are pretty, produced locally, have great support and reputation, hold their resale price, etc. For that reason, except in rare cases, price is not a consideration for me.

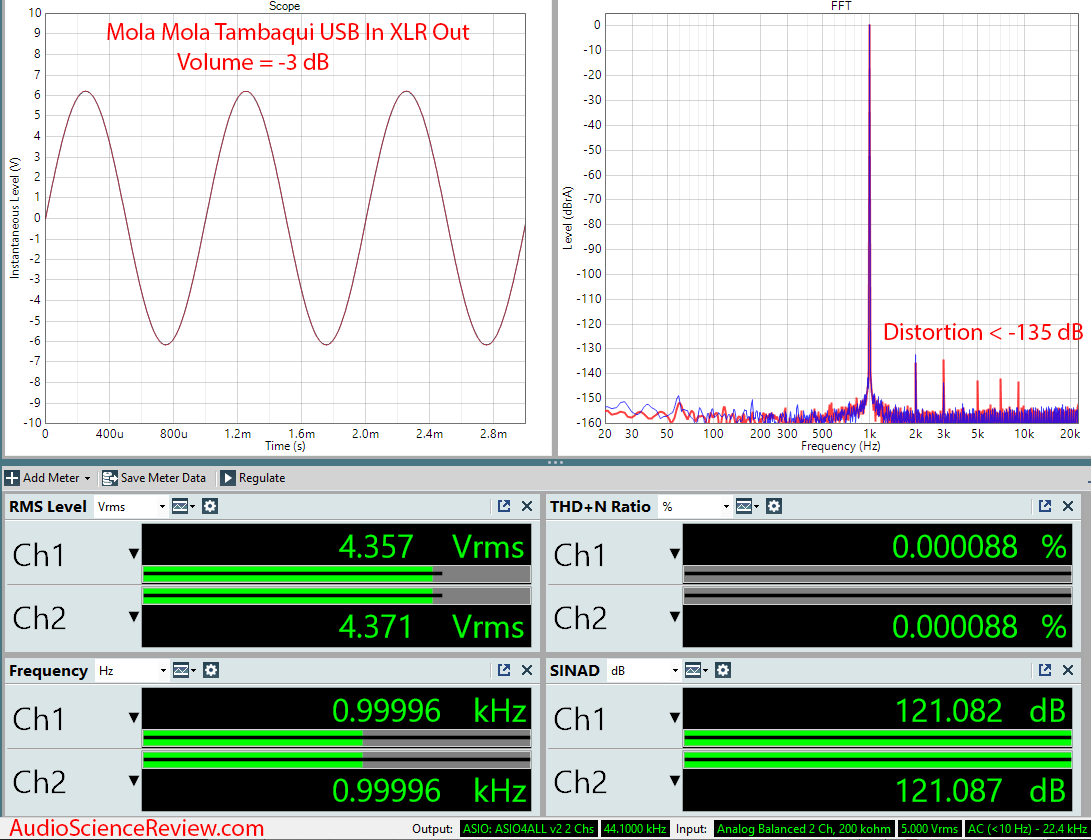

Here is a review of the Mola Mola Tambaqui USB DAC and Ethernet streamer. It costs a cool $11,500.

In case you are not familiar with my "panther coding," it got the highest award I could give it. Why did I do that? Because it is superbly engineered to reduce noise and distortion:

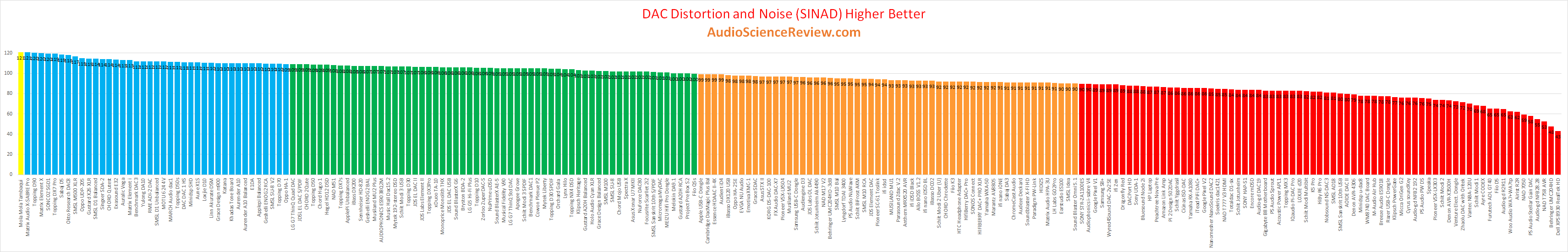

At the time, it shattered all records, landing on top (left) of the SINAD chart:

Here is how I finish the review:

Conclusions

The Mola Mola Tambaqui DAC shows again that just because a DAC is designed from the ground up, it need not perform poorly. It is actually the opposite, with it performing at the top of its class with respect to distortion and noise.

Since I am not the one paying for it, the cost is not my issue to worry about. As such, I am happy to recommend the Mola Mola Tambaqui DAC based on its measured performance and functionality.

While I never use the term "snake oil," members use it to refer to products that a) are expensive and b) don’t meet any of their claims of superiority or effectiveness. Someone mentioned the AudioQuest USB cable. This is how it rated:

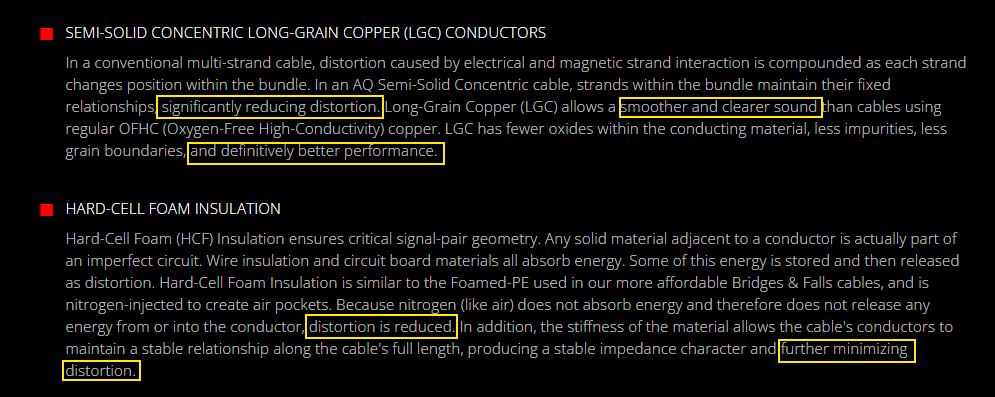

That panther coding says someone is after your pocketbook rather than delivering better sound. Here are the manufacturer claims:

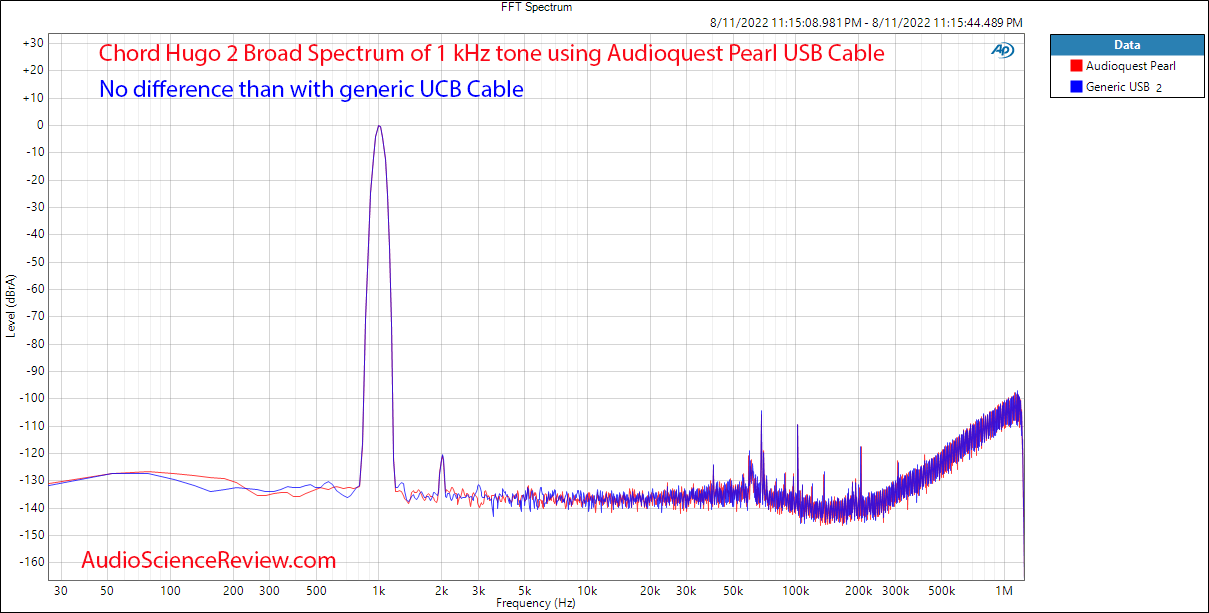

See all those mentions of reduced distortion? We can measure that. We do it day in and day out:

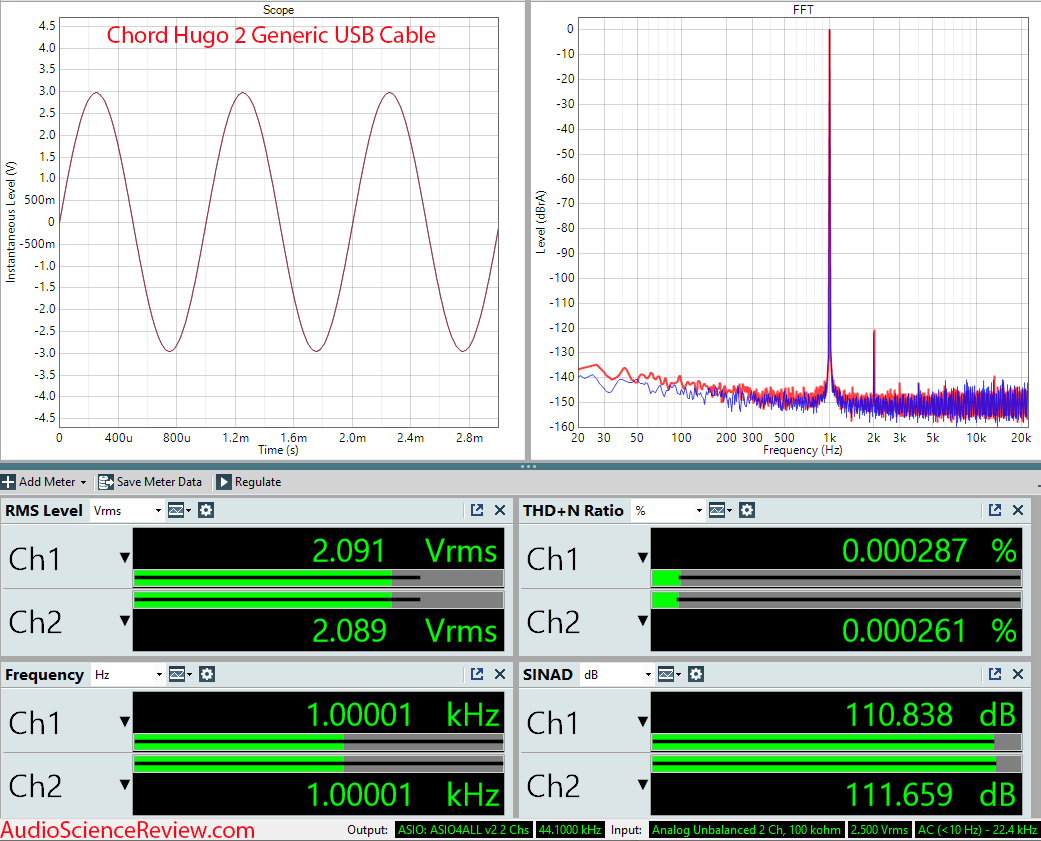

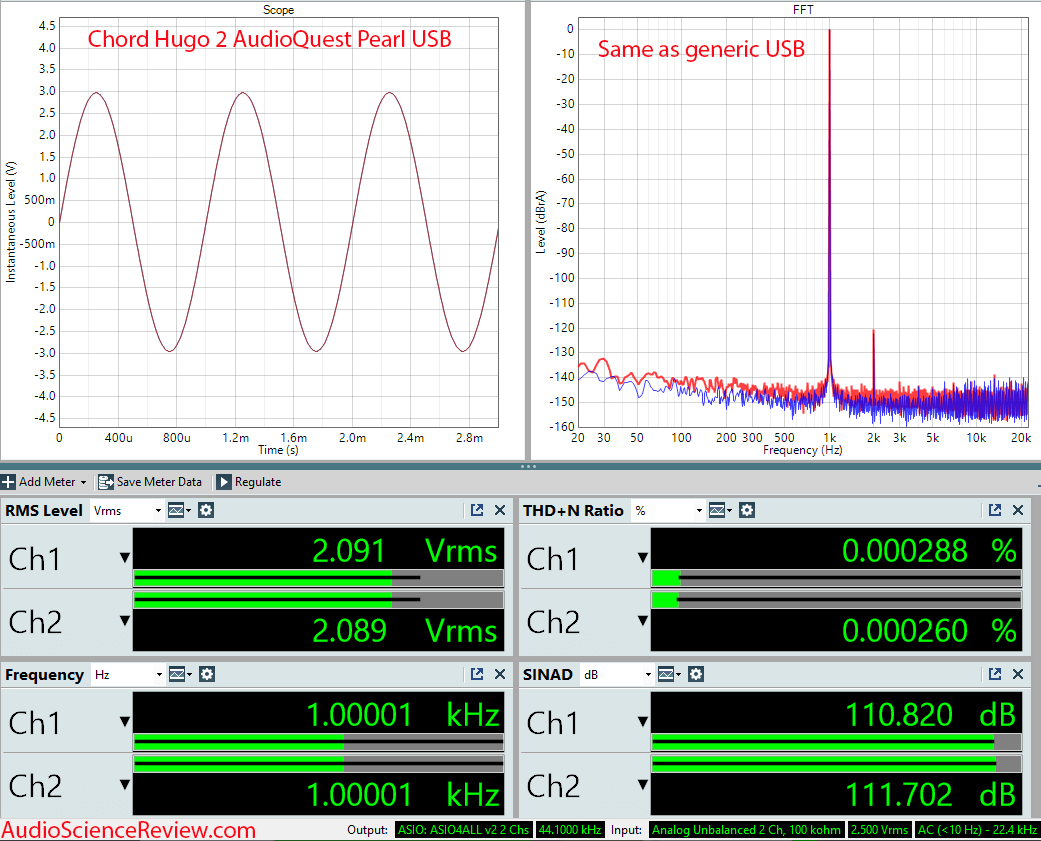

Now with the AQ cable:

Distortion is not reduced whatsoever. Even when measuring out to 20 times the bandwidth of human hearing, nothing changes as far as noise and distortion:

Here are my listening test results:

Audioquest Pearl Listening Test

I plugged my Dan Clark Stealth headphone into the Hugo 2 DAC and started to listen. The Stealth is a sealed-back headphone with the lowest distortion I have measured in a headphone. So if there is a difference, this is the ideal way to hear it.

I queued up a track with lots of ambiance and delicate sounds and started to play it with the AudioQuest USB cable. The sound was as wonderful as I remembered it. I then switched to the generic cable and instantly the sound was louder and there was better clarity all around! This effect faded within a few seconds, though, indicating the typical faulty sighted listening test effect. From then on, I could not detect any difference between the two cables.

Hence my summary of the review:

Conclusions

I know many of us consider these results "as expected" but it is always good to verify that, time after time, very accurate measurements show no difference between generic/cheap and premium cables. The premium is not a lot in the case of the AudioQuest Pearl USB cable. So if you want to get it, there is no major harm done. Just don’t expect any audible improvements from it.

I can’t recommend the AudioQuest Pearl cable if you are buying it for audio performance.

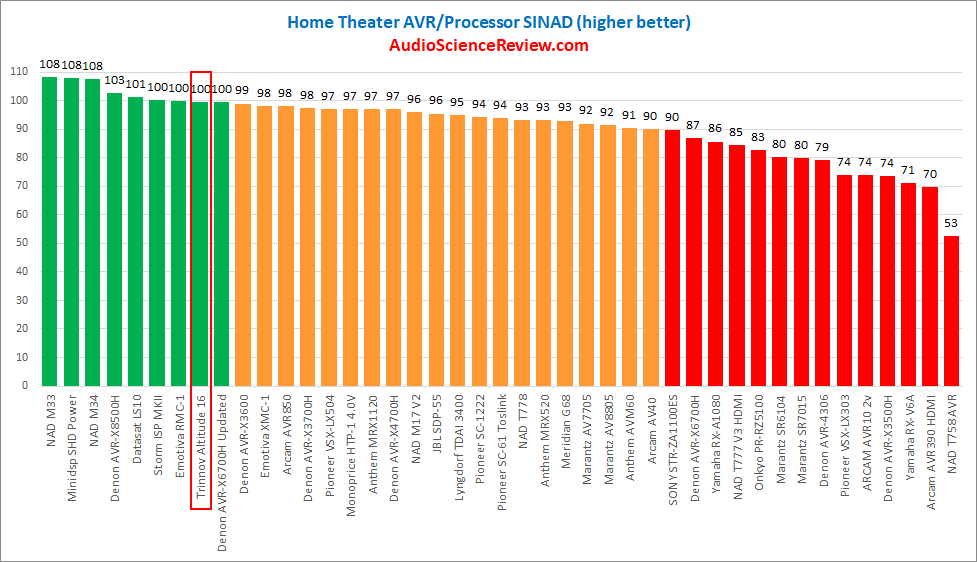

The TACT processor was expensive because such home theater processors have always been expensive. You can’t get them with balanced outputs for less than a few thousand dollars. High-end ones comparable to the TACT cost even more today. Here is my Trinnov Altitude 16 review. It got a "good" rating from me even though it cost $17,000:

See? Not hard to understand what we do. Go and spend proper time on ASR and you would know. If you don’t, ask me questions.

|